When Apple launched iOS 15 back in 2021, the updated software included a new feature in Photos called Visual Look Up. This neat AI-powered tool can recognize animals, plants, and landmarks in your photos and provide information about them when tapped.

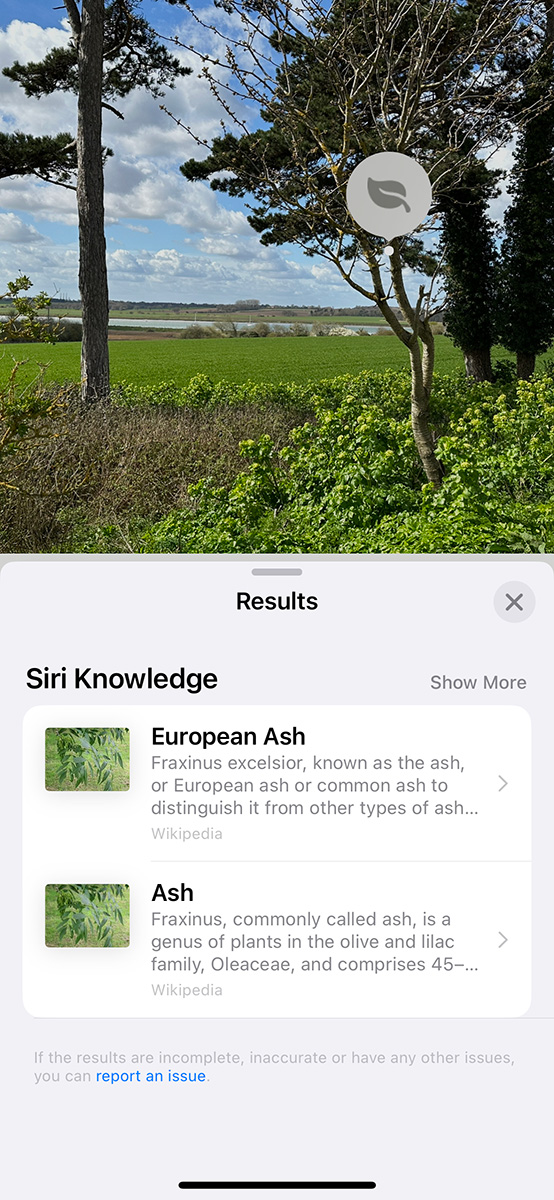

Here’s how it works: When you open a picture of some greenery in Photos, for example, you’ll notice a couple of small stars next to the information icon in the bottom bar, which means iOS has spotted something it recognizes. Tap the icon and you’ll quickly be told that the tree in the picture is probably a European ash. Very handy, albeit only within a relatively small number of categories.


But two years on, Apple is expanding the usefulness of this previously niche feature. iOS 17 and iPadOS 17, which were demoed at WWDC this month and will become widely available this fall, upgrade Visual Look Up so that it can recognize various kinds of food and, perhaps most useful of all, symbols.

These upgrades were revealed during WWDC (they’re mentioned in our write-up of iPadOS 17, published the same day), but beta testers are only now getting to grips with them. And it’s fair to say that they’re impressed.

Writing on Mastodon on Wednesday, Federico Viticci of MacStories praised iOS 17’s performance interpreting laundry tags: “iOS 17’s Photos app can now identify and explain laundry symbols in pictures and this is amazing,” he wrote. “We need to take back whatever we all said about Apple not doing machine learning right.” (For what it’s worth, not all of us have been criticizing Apple’s machine-learning efforts.)

Laundry tags are hard for humans to read: they’re small, often faded, and cryptic or even counterintuitive in design. But they’re a perfect use case for machine learning, because the symbols are standardized and visually consistent, and decoding them is essentially a matter of remembering what each one means; there’s very little room for subjective interpretation. Accordingly, Visual Look Up happily tells Viticci that the garment in question should not be tumble-dried (too late, apparently) or dry-cleaned.

MacRumors has done some testing of its own and notes that you can tap the information provided to find out where it comes from. The laundry instructions ultimately come, the site reports, from the International Organization for Standardization.

According to MacRumors, the feature is already reliable at this stage of beta testing, appearing to correctly identify every laundry symbol the site tried, although the site notes that images had to be zoomed in quite far for Visual Look Up to work. MacRumors also discovered that the feature can recognize symbols on car dashboards, an upgrade that wasn’t mentioned during the keynote.

While laundry-tag recognition may be a small upgrade, it speaks to the kind of work Apple is putting into Visual Look Up to make it a feature we’ll all use more often. And of course, Visual Look Up could become a key component of the Vision Pro headset once the headset evolves into a device meant to be worn outside.

For more information about the new features coming to an iPhone near you, check out our complete guide to iOS 17.