AI is today’s buzzword. And if you have an iPhone, Apple Intelligence is buzzing all over. The iPhone 16 is the first phone built with Apple Intelligence in mind, and whether you’re buying one this week or not, it’s hard to escape it.

Back in June, when Apple previewed the AI suite coming to compatible devices next month, I was confident that the company would reserve some Apple Intelligence features exclusively for the iPhone 16. After all, Google just unveiled a ton of AI features for its ever-expanding Pixel line, features that are surely appealing to certain iOS users, so I expected Apple to have some exclusive AI tricks up its sleeve to keep them from jumping ship. Boy, was I wrong.

Nothing new

If you’re looking for a comprehensive list of exclusive iPhone 16 AI perks, there’s just one: Visual Intelligence. The tool, which (like the rest of Apple Intelligence) won’t be available at launch, is activated using the new Camera Control button. It lets users point the camera at an object or place to learn more about it or take a relevant action.

For example, you could point your iPhone 16’s camera at a dog to identify its breed. Similarly, you could check a restaurant’s OpenTable listing after scanning its sign, or add an event to your calendar based on a concert poster’s details. There’s also an integrated math-solving utility that relies on ChatGPT’s smarts to help students with their assignments.

While Visual Intelligence is undoubtedly a welcome addition, it’s underwhelming compared to rival companies’ progress in the AI department. Google Lens works similarly and has been available on all relevant smartphones for years. In fact, iPhone 15 Pro users can already map the Action button to trigger it if they’ve installed the official Google app. So Apple cloning that feature and offering it as the iPhone 16’s sole exclusive AI perk is disappointing, to say the least.

Apple Intelligence is built into the iPhone 16—but most of the features won’t be available until next year.

YouTube / Apple

A tale of two intelligences

Perhaps the most significant difference between Google’s and Apple’s philosophies is, to use one of Apple’s iconic words, courage. Google is infamous for developing and shipping all sorts of wild features, then abruptly killing them, presumably based on internal metrics. It isn’t necessarily afraid of releasing a bad product. It tries, adapts accordingly, and moves on when it needs to.

Apple, on the other hand, has a reputation to maintain. The company is known for running extensive experiments internally rather than using its user base as alpha testers. As a result, it typically ships solutions that are either late or limited compared with those of other brands. They may be conservative, but they are stable, and iPhone users appreciate that. But with Apple Intelligence, it seemed like Apple was willing to take chances to catch up with Google and Samsung, starting with the iPhone 16.

That didn’t happen. The Pixel phones are packed with AI features that genuinely enhance their best feature: the camera. You can repaint an object in a photo (Reimagine), change the background (Magic Editor), add yourself to a group photo (Add Me), minimize pixelation in zoomed shots (Zoom Enhance), merge several shots into one (Best Take), and more. That’s not to mention the dual exposure video feature that maintains accurate colors throughout the frame. These options give users nearly full control over their camera output, letting them produce more creative, polished results. Then I look at the pitiful iPhone, whose only AI photo edit is Clean Up, which removes intrusive objects.

The Pixel feels more like an AI phone than the iPhone 16.

Beyond media-related features, recent Pixel phones can also perform some neat tasks using AI, such as answering calls and responding on users’ behalf or holding more natural conversations with Gemini. The Pixel really feels like a true AI smartphone rather than a smartphone with a sprinkle of perfunctory AI on top.

Apple Intelligence isn’t AI-nough

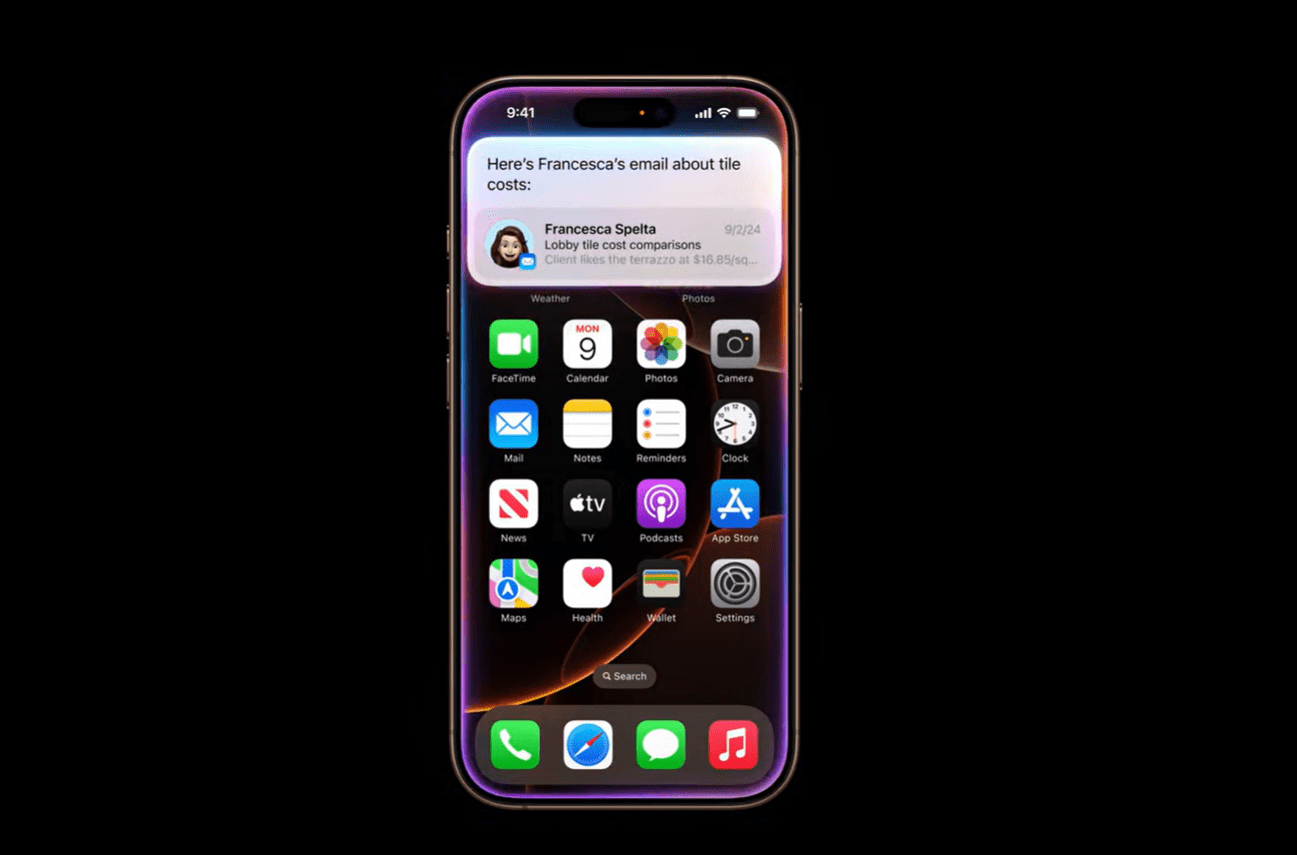

None of this is to say Apple Intelligence isn’t a great start. Writing Tools in the iOS 18.1 beta has been proofreading my articles for weeks now. AI on the iPhone also skillfully summarizes notifications, emails, messages, and web articles. These are all useful features that complement my text-based workflows perfectly, and most of them work offline.

It’s also worth noting that Apple Intelligence is free, while some of Google’s AI features require a Gemini Advanced subscription (or will, once the Pixel 9’s free trial period runs out). In Google’s defense, though, analysts suggest that Apple could also start charging for new AI features in a few years, once its product matures. And given the complexity of Google’s current AI features, the fee is arguably justified, especially since plenty of Gemini’s great features remain free.

The Pixel 9 is loaded with useful AI features.

Dominik Tomaszewski / Foundry

Apple Intelligence isn’t just significantly less intelligent than its Google counterpart; its full feature set also won’t debut until mid-2025. So, while Apple is already advertising a smarter Siri that can pull user data from apps, that feature won’t actually arrive until next year. In fact, iPhone 16 users won’t get any Apple Intelligence features until next month, and the most exciting image-generation features will come even later. Google’s Pixel features, by contrast, are all available now.

Apple is trying to sell a promise on an iPhone that is objectively less advanced than its current rivals, and that’s not a good look. It’s all symptomatic of a company scrambling to keep up in the fast-paced AI race. Apple has boasted about Siri’s improved smarts at events every few years, yet the assistant still fails at the most basic tasks for me. What guarantees the upcoming Siri won’t be just as bad?

Apple had a chance to put Google and the rest of the AI world on notice with the iPhone 16. Instead, it only showed how much Apple Intelligence still needs to learn.