What is Apple Intelligence?

Apple Intelligence combines the content creation and generation capabilities of AIs like ChatGPT with Siri’s interaction and automation capabilities.

What does this mean? You can use ChatGPT to generate a birthday message for a loved one, and Siri can schedule it to be sent at the right time, directly via the app of your choice. On its own, ChatGPT cannot do this.

Unlike competing approaches, including Galaxy AI, which relies heavily on Google’s cloud, Apple wants to enable you to perform as many tasks as possible locally on your device. Apple Intelligence offers three levels to do so:

- Locally, directly, and only on your iPhone.

- Via the secure Apple Private Cloud Compute: Apple’s servers used only for the function you want, without any data stored on them. Apple itself has no way of accessing your personal data.

- Via third-party AIs such as ChatGPT: Apple enables the use of ChatGPT in its secure environment for free, without the need for an OpenAI account. Apple even wants to give you the choice between several AIs in the future and not just ChatGPT.

Whether you sort your emails by priority, organize your photos in a memory album, or compile your texts, your data won’t end up in some cloud.

When will Apple Intelligence arrive and on which models?

Since these local actions require an enormous amount of processing muscle, Apple is limiting the compatibility of Apple Intelligence to its latest iPhones, iPads, and Macs with the latest SoCs. Hence, you will need a Mac or iPad with at least an M1 SoC, or an iPhone with the A17 Pro SoC or better.

At the iPhone 16 launch, Apple revealed its roadmap for the launch of Apple Intelligence. When the new iPhones are delivered on September 20, iOS 18 will be installed on the devices, but Apple Intelligence will not be present. The AI platform will eventually debut in October with iOS 18.1 via a free software update (alongside iPadOS 18.1 and macOS Sequoia 15.1) in a beta version.

Which hardware can look forward to powering Apple Intelligence? Last year’s Pro iPhones and the entire new iPhone series are included, as are iPads and Mac models with M1 or newer chipsets. However, many of the features already presented will only be added later, although Apple did not explicitly mention when the select features will arrive.

However, we expect writing tools for text summaries, Siri’s iPhone tutorials, and the Cleanup tool, among others, to be available in October, while the image processing tools, for instance, will probably not follow until December.

Speaking of “later”: Apple Intelligence is initially only available in US English. Adapted English versions for Australia, Canada, New Zealand, South Africa, and the UK will follow at the end of the year. New languages will be available in 2025, including support for Chinese, French, Spanish, and Japanese as explicitly mentioned at the Apple event. If you happen to speak another language such as German, you may have to wait for a little longer.

The best Apple Intelligence features for your iPhone

A new Siri that understands context

Siri not only looks better, is easier to understand, and more natural, it has now gained the ability to read and write! You can choose whether you want to interact by voice or enter text. What’s more, Siri is now smart enough to recognize when you stumble over your own words and can still provide the right answers.

Basically, Siri is no longer limited to a fixed set of actions. You can interact with Apple’s assistant as you would with ChatGPT, that is, with prompts as in a chat. Siri will understand the context of your request, analyze it, and perform the appropriate actions as automatically and intuitively as possible.

A completely new design

Siri has been given a completely new design and now appears in an elegant, shiny light that wraps around the edge of your screen.

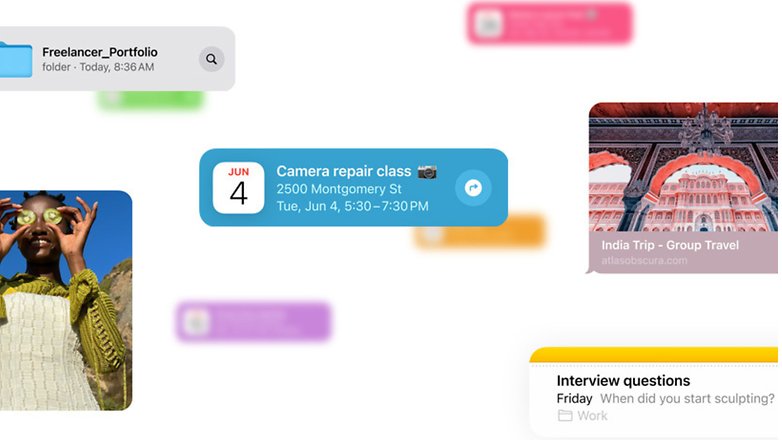

Onscreen awareness

With Apple Intelligence, Siri understands what is happening on the screen and can interact with the elements displayed. If a friend sends you their new address by text message, you can say: “Add this address to their contact card” and Siri will take care of it. The result is contextual actions adapted to what is happening on your screen.

Tutorials from Apple and for Apple

Don’t know how to use a function? Perhaps you want to change something in the settings without knowing how to do so? You can benefit from Siri’s extensive knowledge of the functions and settings of your devices. You can ask questions to learn something new on your iPhone, iPad, and Mac, and Siri can give you step-by-step instructions.

You no longer have to talk to Siri

If you double-tap the bottom of the screen on your iPhone or iPad, you can write to Siri from anywhere in the system if you don’t want to speak out loud. This is handy for sending it prompts without the people around you hearing your instructions.

More natural conversations with Siri

Siri now has better speech understanding and an improved voice, making conversation more natural. When you refer to something you mentioned in a previous request, such as the location of a calendar event you just created, and ask, “What will the weather be like there?”, Siri knows what you’re talking about.

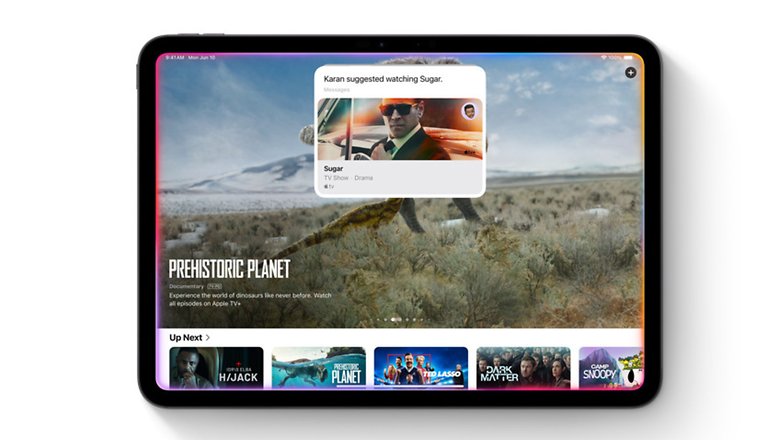

Siri can create content and use it across multiple applications

Siri can now operate seamlessly within and between apps. You can make a request like “Send the email I wrote to Jean-Michel and Marie-Eudes” and Siri will know which email you mean and which app it’s in.

You can also combine several functions to create content and interactions between different apps. For example, you can record a voice note in Notes. Siri will summarize it for you and then email it to one or more specific contacts.

Siri remembers your personal background

If Siri knows your personal context, it can help you in a way that is tailored to you. If you can’t remember whether a friend shared a recipe with you in a note, text message, or email, Siri uses its knowledge of the information on your device to help you find what you’re looking for without violating your privacy.

Apple Private Cloud Compute

During the keynote, Apple repeatedly emphasized the importance of data protection: Siri performs many tasks locally and will now be even more deeply integrated into the overall system.

If an action is not performed locally, you will be asked beforehand whether the data may be sent to special servers that run on Apple silicon chips where it is not stored but only used for this specific action.

Apple calls this Private Cloud Compute and wants to make it a new standard. To guard against misuse of your data, the Cupertino-based company explained that independent experts can verify this data protection promise.

Integration of ChatGPT

Apple has secured the services of OpenAI, more specifically GPT-4o. Apple wrote in the press release:

In addition, access to ChatGPT is integrated into Siri and the system’s writing tools on all Apple platforms. This allows users to access the knowledge and skills to understand images and documents without having to switch between tools.

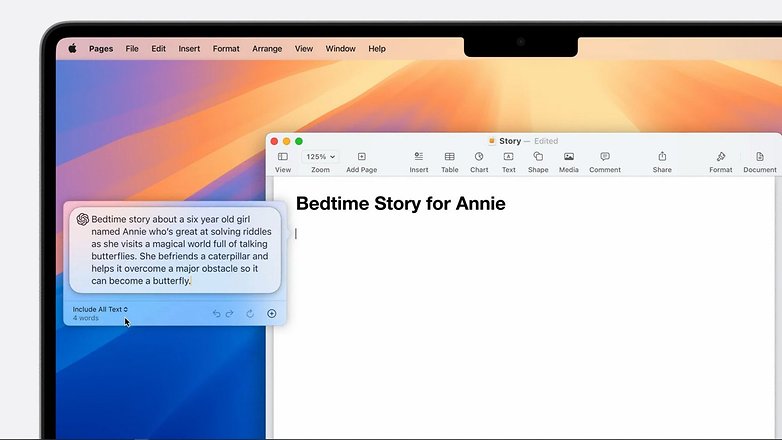

If Siri does not have an answer to a question, it offers to ask ChatGPT without you having to launch the application. ChatGPT can also, for instance, analyze a photo or PDF file directly from Siri and forward it if required. ChatGPT can also generate text from scratch in any application with a prompt.

We still have to be patient, as ChatGPT integration is slated for release “later this year”. Siri and ChatGPT will therefore work together, complementing Apple Intelligence by turning to the cloud when the local options are insufficient.

Apple showed an example where a story was generated for a young child, with corresponding images. An example was also shown in which ChatGPT indicated which dish could be prepared based on the ingredients in a picture.

It’s also good to know that you don’t need an OpenAI account to use ChatGPT on your iPhone, making the entire thing free.

Image Playground

Apple Intelligence will also offer the Image Playground function, which you can use to generate images. You can choose between illustrations, animations, and sketches. You can use this function directly in messages or as a stand-alone app.

You can create images in no time at all by specifying various parameters. For example, if you enter the name of your chat partner, a birthday cake, and a pirate ship, you will receive a drawing of your conversation partner that represents exactly what you want. Image Playground will therefore be similar to what other AIs like DALL-E or Midjourney offer.

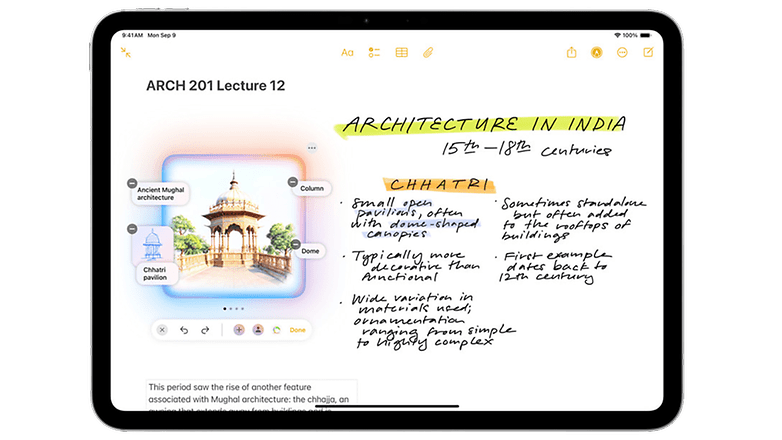

Image Wand

Image Wand was designed to make notes more visually appealing by turning your rough sketches into attractive images. If you circle an empty area, Image Wand creates an image from the context of the surroundings.

Genmoji

With Genmoji, Apple wants to take the emoji experience even further. From the emoji keyboard, it will be possible to generate a customized emoji with a sentence (in the “Create new emoji” section). Just like emojis, Genmojis can also be added to messages or shared as stickers or reactions.

This feature was designed to make sharing more fun: you can create a Genmoji of your friends and family from their photos. You can even have Genmojis that express exactly what you want to express, which is something emojis aren’t always good at.

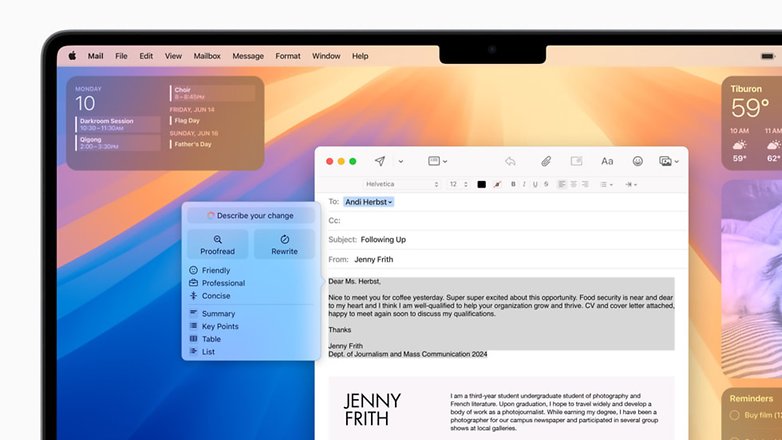

Rewrite

With this feature, you can edit text in any app by simply highlighting it and clicking a small colored button. You can change the tone of a text (friendly, professional, or concise), correct spelling and grammatical errors, summarize a text with a bullet list, or create a table of contents. In all these cases, Apple Intelligence will explain its modifications.

Clean Up

Similar to Google’s Magic Eraser function, you can use the Clean Up function in Apple Photos to tidy up your photos. Is someone in a photo bothering you? You can make them disappear using a generative model. Clean Up can also remove shadows and reflections by using advanced image understanding.

Memories

Apple lets you create a customized memory video with a prompt. Simply write a sentence describing what you want and you will receive a small compilation within seconds. You can’t edit the video with AI, but it will be possible to customize it manually. Apple Intelligence will also automatically suggest a title.

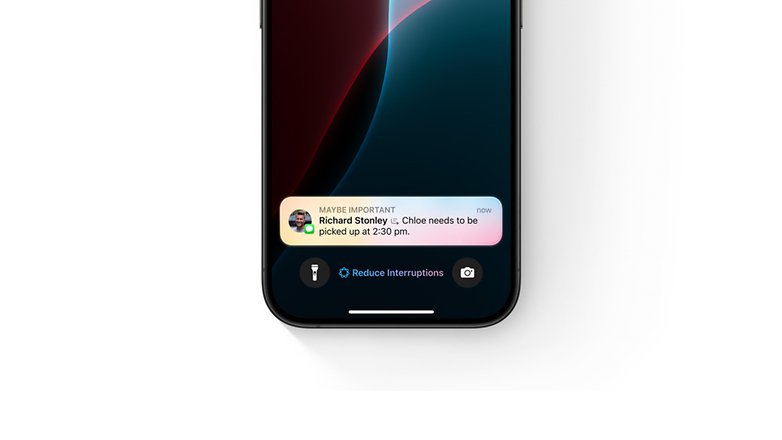

Prioritized notifications

Prioritized notifications now appear at the top of the stack so you can see what you need to pay attention to at a glance. They are also grouped together so you can scan them faster without having to expand them by tapping.

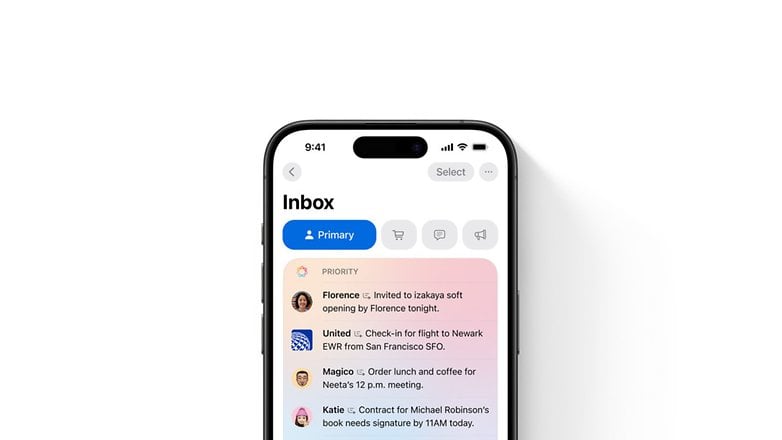

Priority Messages

This feature places urgent messages at the top of your inbox, such as an invitation with a deadline for today or a reminder to check in for your flight this afternoon.

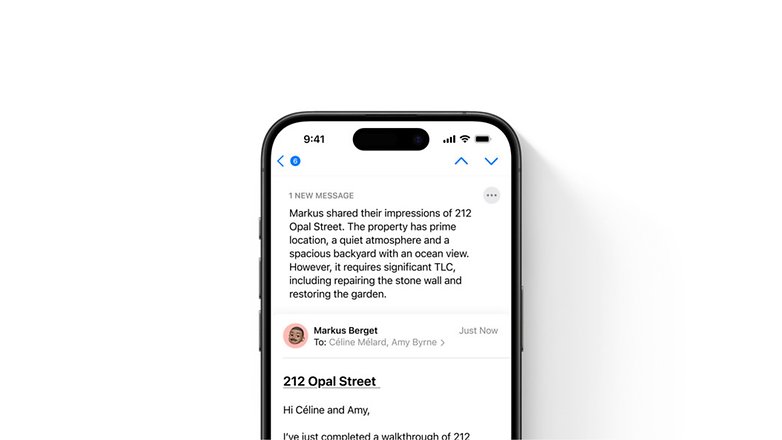

Summary

Simply tap on the summary of a long email in the Mail app and you’ll get the most important information in a nutshell. You can also view email summaries directly in your inbox.

Voice recording in the Notes and Phone apps

Simply tap Record in the Notes or Phone app to make audio recordings and play them. Apple Intelligence creates summaries of your notes so that you have the most important information at a glance.

Fewer interruptions

There’s a new Focus mode, Reduce Interruptions, that understands the content of your notifications and shows you only those that require immediate attention, such as a reminder to pick up your child from daycare later in the day.

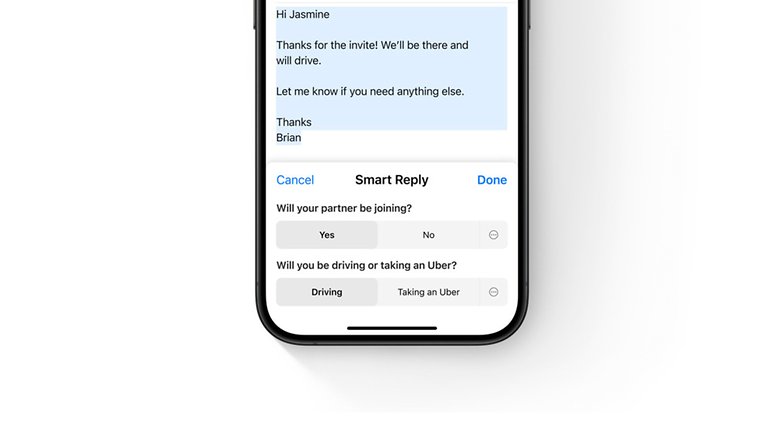

Smart Reply

Smart Reply lets you use an automatic, appropriate reply in Mail to quickly respond to an email with all the necessary details. Apple Intelligence can identify the questions asked in an email and suggest relevant options for your reply. With just a few taps, you are then ready to send a reply containing answers to the most important questions.

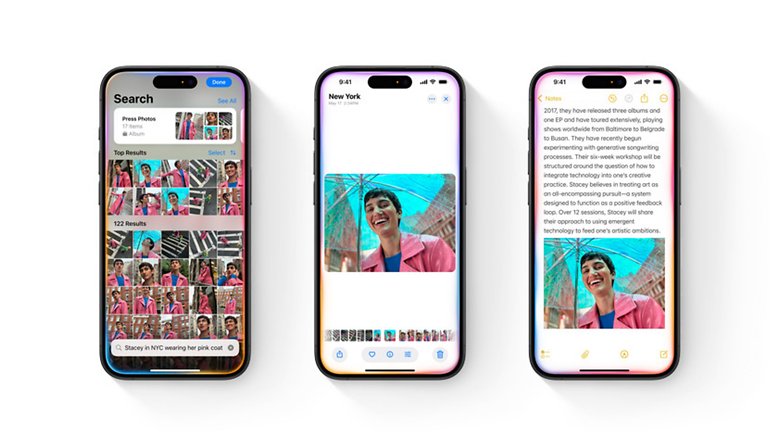

Search for photos and videos in your gallery

If you simply describe what you’re looking for in the Photos app, Apple Intelligence can find a specific moment in a video clip that matches your search description and take you straight there.

What do you think of Apple’s approach to artificial intelligence? What is your favorite Apple Intelligence feature? Would you replace your old Apple device to be able to use Apple Intelligence? What do you think about the fact that only a few functions are initially available?