The latest Ray-Ban glasses from Meta are equipped with an integrated camera and manage the balancing act between fashion and technology. The cool design and hardware are exciting for content creators. However, an uneasy feeling and questions remain: What happens to the images and videos that the glasses record? How does Meta use this data?

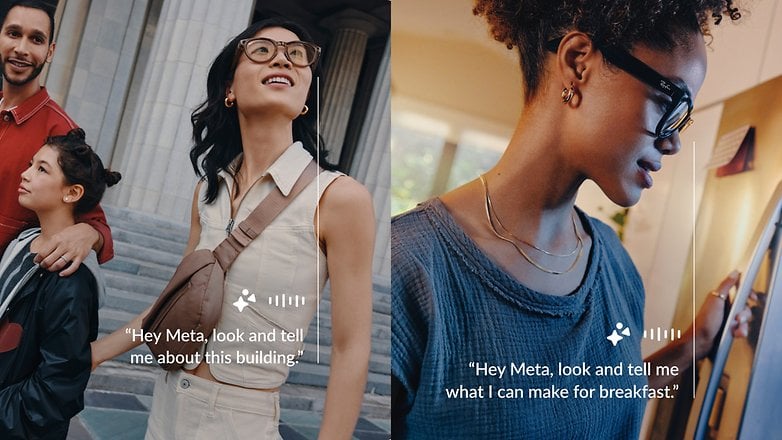

Meta, the parent company behind Facebook, Instagram, and WhatsApp, is known for developing technologies that change the way we communicate and interact, and for pushing boundaries. With its latest glasses, developed in collaboration with Ray-Ban, the company aims to create real added value through artificial intelligence in everyday life. To do so, the glasses can use image data from the environment to provide contextual information or recommendations.

The dark side of data processing

One of the key questions that arises is this: Where is most of the image data processed? The answer: due to a lack of locally available computing power, image data is not analyzed on the device itself, but on Meta’s cloud servers. This naturally enables far more spectacular features, but it also raises concerns about data protection practices.

Information sent to the cloud is not simply evaluated but also used to train AI models, and it therefore remains stored in some form. A paper co-authored by Google DeepMind researchers showed why this can be problematic: through clever prompting, the researchers succeeded in extracting training data from an OpenAI model.

Image data as AI training material

Meta has now confirmed to TechCrunch that image and video data will be used to train Meta AI, but only if users actually use the in-house Meta AI. This should apply only to recordings that users have had analyzed by the AI. However, what sounds clear and transparent in Meta’s official statement will probably be buried in lengthy terms of service and complicated menus in practice, and it would not be the first time.

For now, this applies only to the USA and Canada, as the AI features are not yet available elsewhere. The strict data protection regulations in Europe will lead to higher requirements, but valid questions remain: How can one really ensure that data, once released, is not misused? How can one determine in practice what actually ends up in the cloud and what doesn’t?

Meta’s responsibility

Meta has the responsibility to transparently communicate how data processing works and how our data is protected. At a time when privacy has become a key concern, users need to be educated and empowered to make informed decisions about how and when they share their data. The continued integration of technology into our daily lives should not come at the expense of our privacy.

In a world where technology and lifestyle are increasingly merging, it is crucial for users to keep a critical eye on the products they use. The Ray-Ban glasses from Meta are just the beginning of a new era in which data and personal information play a key role, all the more so because these glasses are designed to share more private data than ever before.