Key Takeaways

If you see the “Too many open files” error message in Linux, your process has hit the upper limit of files it’s allowed to open, usually 1,024. You can temporarily increase the limit to, for example, 2,048 files, with the command “ulimit -n 2048”. Increase the limit permanently by editing systemd configuration files.

On Linux computers, system resources are shared amongst the users. Try to use more than your fair share and you’ll hit an upper limit. You might also bottleneck other users or processes.

What Is the Too Many Open Files Error?

Amongst its other gazillion jobs, the kernel of a Linux computer is always busy watching who’s using how many of the finite system resources, such as RAM and CPU cycles. A multi-user system requires constant attention to make sure people and processes aren’t using more of any given system resource than is appropriate.

It’s not fair, for example, for someone to hog so much CPU time that the computer feels slow for everyone else. Even if you’re the only person who uses your Linux computer, there are limits set for the resources your processes can use. After all, you’re still just another user.

Some system resources are well-known and obvious, like RAM, CPU cycles, and hard drive space. But there are many, many more resources that are monitored and for which each user — or each user-owned process — has a set upper limit. One of these is the number of files a process can have open at once.

If you’ve ever seen the “Too many open files” error message in a terminal window or found it in your system logs, it means that the upper limit has been hit, and the process is not being permitted to open any more files.

Why Are So Many Files Opening?

There’s a system-wide limit to the number of open files that Linux can handle. It’s a very large number, as we’ll see, but there is still a limit. Each user process gets its own, much smaller, allocation out of that system total.

What actually gets allocated is a number of file handles. Each file that is opened requires a handle. Even with fairly generous allocations, system-wide, file handles can get used up faster than you might first imagine.

Linux abstracts almost everything so that it appears as though it is a file. Sometimes they’ll be just that, plain old files. But other actions, such as opening a directory, use a file handle too. Linux uses block special files as a sort of driver for hardware devices. Character special files are very similar, but they are more often used with devices that have a concept of throughput, such as terminals and serial ports.

Block special files handle blocks of data at a time and character special files handle each character separately. Both of these special files can only be accessed by using file handles. Libraries used by a program use a file handle, streams use file handles, and network connections use file handles.

Abstracting all of these different requirements so that they appear as files simplifies interfacing with them and allows such things as piping and streams to work.

You can see that behind the scenes Linux is opening files and using file handles just to run itself — never mind your user processes. The count of open files isn’t just the number of files you’ve opened. Almost everything in the operating system is using file handles.
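As a rough illustration, on a system with /proc mounted you can list the descriptors a process is holding by looking in its fd directory. This sketch inspects the current shell, whose process ID is held in the shell variable $$. The variable name fd_count is ours, purely for illustration.

```shell
# List the file descriptors held by the current shell, whose PID is in $$.
# Each entry is a symbolic link to whatever the descriptor refers to.
ls -l "/proc/$$/fd"

# Count the entries to see how many handles the shell holds while idle.
fd_count=$(ls "/proc/$$/fd" | wc -l)
echo "This shell currently holds $fd_count file descriptors"
```

Even a shell that is sitting idle at a prompt should show a handful of entries.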

How to Check File Handle Limits

The system-wide maximum number of file handles can be seen with this command.

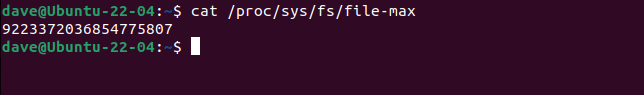

cat /proc/sys/fs/file-max

On our test machine, this returns a preposterously large number: 9.2 quintillion. That’s the theoretical system maximum. It’s the largest possible value you can hold in a 64-bit signed integer. Whether your poor computer could actually cope with that many files open at once is another matter altogether.
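To compare that ceiling with what is actually in use, the kernel also exposes “/proc/sys/fs/file-nr”. This is a minimal sketch, assuming a reasonably modern kernel where that file holds three numbers: the handles currently allocated, the allocated-but-unused handles, and the same maximum that file-max reports. The variable names are ours.

```shell
# file-nr holds three fields: allocated handles, unused handles, system maximum.
read -r allocated unused maximum < /proc/sys/fs/file-nr

echo "File handles allocated system-wide: $allocated"
echo "System-wide maximum:                $maximum"
```

On a desktop machine the allocated figure is typically a tiny fraction of the maximum.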

At the user level, there isn’t an explicit value for the maximum number of open files you can have. But we can roughly work it out. To find out the maximum number of files that one of your processes can open, we can use the ulimit command with the -n (open files) option.

ulimit -n

And to find the maximum number of processes a user can have we’ll use ulimit with the -u (user processes) option.

ulimit -u

On our test machine, these commands returned 1024 and 7640. Multiplying them gives 7,823,360. Of course, many of those processes are already in use by your desktop environment and other background processes. So that’s another theoretical maximum, and one you’ll never realistically achieve.
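If you’d rather let the shell do the multiplication with your own values, something like the following should work. It’s only a sketch: the is_number helper and the variable names are ours, and the check is there because either limit can be reported as “unlimited”, which can’t be multiplied.

```shell
# Succeed only if the argument is a non-empty string of digits.
is_number() {
  case "$1" in
    ''|*[!0-9]*) return 1 ;;
    *) return 0 ;;
  esac
}

files_per_process=$(ulimit -n)
# Not every shell supports -u, so fall back to a placeholder if it fails.
max_processes=$(ulimit -u 2>/dev/null || echo unknown)

if is_number "$files_per_process" && is_number "$max_processes"; then
  echo "Theoretical ceiling: $((files_per_process * max_processes))"
else
  echo "Not numeric: files=$files_per_process processes=$max_processes"
fi

# The figures quoted in the text:
article_total=$((1024 * 7640))
echo "$article_total"   # prints 7823360
```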

The important figure is the number of files a process can open. By default, this is 1024. It’s worth noting that opening the same file 1024 times concurrently is the same as opening 1024 different files concurrently. Once you’ve used up all of your file handles, you’re done.

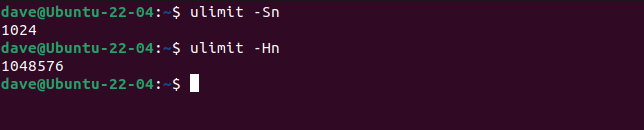

It’s possible to adjust the number of files a process can open. There are actually two values to consider when you’re adjusting this number. One is the value it is currently set to, or that you’re trying to set it to. This is called the soft limit. There’s a hard limit too, and this is the highest value that you can raise the soft limit to.

The way to think about this is that the soft limit is the “current value” and the hard limit is the highest value the current value can reach. A regular, non-root user can raise their soft limit to any value up to their hard limit. Only the root user can raise a hard limit.

To see the current soft and hard limits, use ulimit with the -S (soft) and -H (hard) options, and the -n (open files) option.

ulimit -Sn

ulimit -Hn

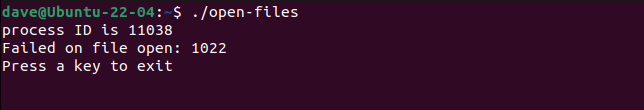
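The ulimit command only reports the limits of the shell you run it in. To check the limits of a different process, you can read its entry in /proc instead. This sketch uses the current shell’s PID as a stand-in; substitute the PID you’re interested in. The variable names are ours.

```shell
# The current shell, as a stand-in for any PID you are allowed to inspect.
pid=$$

# Show the raw line: the columns are limit name, soft limit, hard limit, units.
grep '^Max open files' "/proc/$pid/limits"

# Pull out just the two values.
limits=$(awk '/^Max open files/ { print "soft=" $4, "hard=" $5 }' "/proc/$pid/limits")
echo "$limits"
```

For the current shell, the two values should match the output of ulimit -Sn and ulimit -Hn.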

To create a situation where we can see the soft limit being enforced, we created a program that repeatedly opens files until it fails. It then waits for a keystroke before relinquishing all the file handles it used. The program is called open-files.

./open-files

It opens 1021 files and fails as it tries to open file 1022.

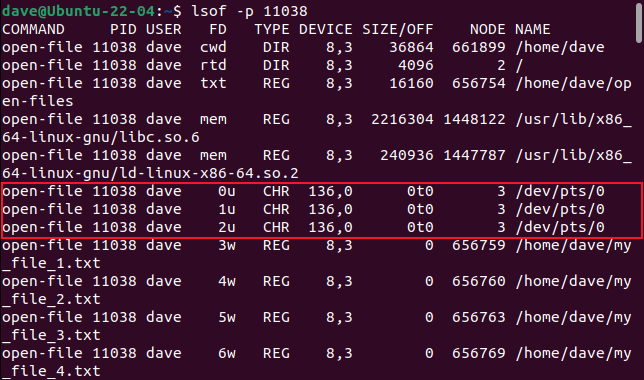

1024 minus 1021 is 3. What happened to the other three file handles? They were used for the STDIN, STDOUT, and STDERR streams. They’re created automatically for each process. These always have file descriptor values of 0, 1, and 2.
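You don’t need our test program to see the limit bite. As a rough sketch, you can shrink the soft limit to 3 inside a subshell, which leaves room for descriptors 0, 1, and 2 and nothing else, and then try to open one more file. This assumes all three standard streams are open, as they are in a normal terminal session. The variable name message is ours.

```shell
# Run everything in a subshell so the tiny limit never touches the current shell.
# LC_ALL=C keeps the error text untranslated.
# With a soft limit of 3, only descriptors 0, 1, and 2 are allowed, so the
# attempt to open /dev/null for the no-op command ":" should be refused.
# "|| true" stops the expected failure from aborting a script run with "set -e".
message=$( ( LC_ALL=C; export LC_ALL; ulimit -Sn 3; : < /dev/null ) 2>&1 ) || true

echo "$message"
```

The exact wording varies from shell to shell, but the message should end with “Too many open files”.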

We can see these using the lsof command with the -p (process) option and the process ID of the open-files program. Handily, it prints its process ID to the terminal window.

lsof -p 11038

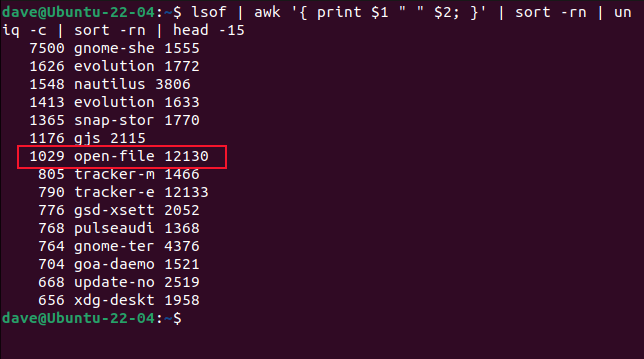

Of course, in a real-world situation, you might not know which process has just gobbled up all the file handles. To start your investigation you could use this sequence of piped commands. It’ll tell you the fifteen most prolific users of file handles on your computer.

lsof | awk '{ print $1 " " $2; }' | sort -rn | uniq -c | sort -rn | head -15

To see more or fewer entries adjust the -15 parameter to the head command. Once you’ve identified the process, you need to figure out whether it has gone rogue and is opening too many files because it is out of control, or whether it really needs those files. If it does need them, you need to increase its file handle limit.
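Bear in mind that lsof also lists entries that aren’t file descriptors, such as a process’s working directory and its memory-mapped libraries, so its totals run higher than the count the limit applies to. As an alternative sketch, you can count the entries in each process’s /proc fd directory. The function name top_fd_users is ours. Without root privileges you’ll only be able to read the directories of your own processes, so run it with sudo for a system-wide view.

```shell
# Print "count pid name" for the processes holding the most descriptors.
# The optional argument is how many entries to show (default 15).
top_fd_users() {
  for dir in /proc/[0-9]*; do
    pid=${dir#/proc/}
    # Unreadable or vanished processes simply count as zero.
    count=$(ls "$dir/fd" 2>/dev/null | wc -l)
    [ "$count" -gt 0 ] || continue
    name=$(cat "$dir/comm" 2>/dev/null) || name="?"
    echo "$count $pid $name"
  done | sort -rn | head -n "${1:-15}"
}

top_fd_users 15
```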

How to Increase the Soft Limit

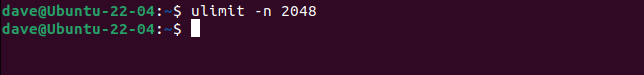

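If we increase the soft limit and run our program again, we should see it open more files. We’ll use the ulimit command and the -n (open files) option with a numeric value of 2048. This will be the new soft limit. Be aware that in Bash, ulimit -n without the -S or -H option sets the hard limit to the same value too. Use ulimit -Sn 2048 if you want to leave the hard limit alone.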

ulimit -n 2048

This time we successfully opened 2045 files. As expected, this is three fewer than 2048, because of the file handles used for STDIN, STDOUT, and STDERR.
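If you want to experiment without disturbing your current shell, you can make the change inside a subshell. The following is a sketch under that assumption. It uses the -S option so that only the soft limit is touched, and the variable names are ours.

```shell
before=$(ulimit -Sn)

# Command substitution runs in a subshell, so the change is discarded afterwards.
inside=$(
  # The request is refused if 2048 exceeds the hard limit.
  ulimit -Sn 2048 2>/dev/null || echo "Request refused; hard limit is $(ulimit -Hn)" >&2
  ulimit -Sn
)

after=$(ulimit -Sn)
echo "before=$before inside=$inside after=$after"
```

The before and after values should be identical, because the new limit never leaves the subshell.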

How to Permanently Change the File Limit

Increasing the soft limit only affects the current shell. Open a new terminal window and check the soft limit. You’ll see it is the old default value. But there is a way to globally set a new default value for the maximum number of open files a process can have that is persistent and survives reboots.

Outdated advice often recommends you edit files such as “/etc/sysctl.conf” and “/etc/security/limits.conf.” However, on systemd-based distributions, these edits don’t work consistently, especially for graphical log-in sessions.

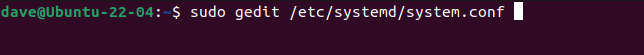

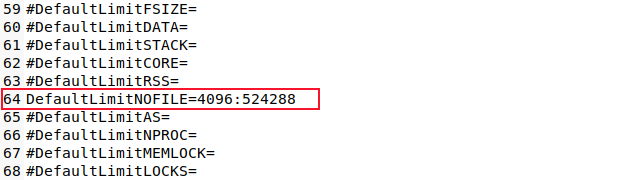

The technique shown here is the way to do this on systemd-based distributions. There are two files we need to work with. The first is the “/etc/systemd/system.conf” file. We’ll need to use sudo.

sudo gedit /etc/systemd/system.conf

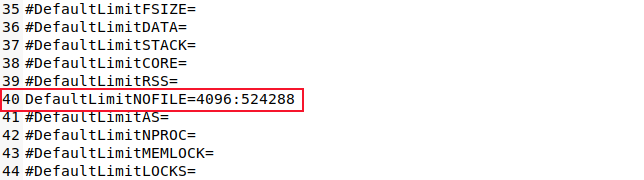

Search for the line that contains the string “DefaultLimitNOFILE.” Remove the hash “#” from the start of the line, and edit the first number to whatever you want your new soft limit for processes to be. We chose 4096. The second number on that line is the hard limit. We didn’t adjust this.

Save the file and close the editor.
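If you prefer to script the change rather than use an editor, the edit boils down to a single substitution. The sketch below works on a scratch copy rather than the real file. The sample line it starts from is our assumption of a typical stock entry, and yours may show different numbers.

```shell
# Work on a scratch copy; the sample line is an assumed stock entry.
scratch=$(mktemp)
printf '%s\n' '[Manager]' '#DefaultLimitNOFILE=1024:524288' > "$scratch"

# Remove the leading hash, if any, and replace only the first (soft) number.
# \1 is the captured setting name, followed by the new value 4096.
edited=$(sed -E 's/^#?(DefaultLimitNOFILE=)[0-9]+/\14096/' "$scratch")
echo "$edited"

rm -f "$scratch"
```

Once you’re happy with the result, the same sed expression could be applied to the real file with sudo. It would be wise to take a backup copy first.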

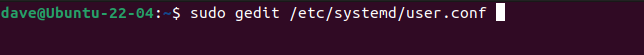

We need to repeat that operation on the “/etc/systemd/user.conf” file.

sudo gedit /etc/systemd/user.conf

Make the same adjustments to the line containing the string “DefaultLimitNOFILE.”

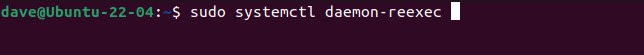

Save the file and close the editor. You must either reboot your computer or use the systemctl command with the daemon-reexec option so that systemd is re-executed and ingests the new settings.

sudo systemctl daemon-reexec

Opening a terminal window and checking the new limit should show the new value you set. In our case that was 4096.

ulimit -n

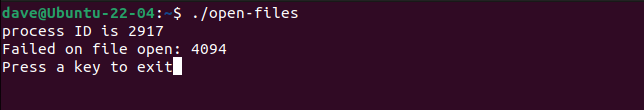

We can test this is a live, operational value by rerunning our file-greedy program.

./open-files

The program fails to open file number 4094, meaning 4093 files were opened. That’s our expected value, three fewer than 4096.

Remember That Everything is a File

That’s why Linux is so dependent on file handles. Now, if you start to run out of them, you know how to increase your quota.