A relatively new trend in facial recognition technology uses a 3D model of the face, which its proponents claim provides greater accuracy.

3D facial recognition captures a real-time 3D image of a person’s facial surface and identifies the subject by distinctive features of the face where rigid tissue and bone are most apparent, such as the curves of the eye socket, nose and chin. These areas are unique to each person and don’t change significantly over time.

Because it relies on depth and an axis of measurement that is not affected by lighting, 3D facial recognition can even be used in darkness. It can also recognize a subject at different view angles, potentially up to 90 degrees (a face in profile).

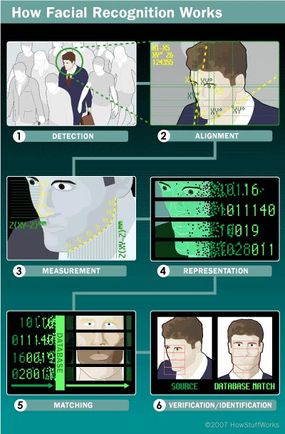

Using the 3D software, the system goes through a series of steps to verify the identity of an individual.

Detection

Acquiring an image can be accomplished by digitally scanning an existing photograph (2D) or by using a video image to acquire a live picture of a subject (3D).

Alignment

Once it detects a face, the facial recognition system determines the head’s position, size and pose. As stated earlier, a 3D system can potentially recognize the subject at angles up to 90 degrees, while with 2D the head must be turned to within about 35 degrees of facing the camera.
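As a rough illustration of the pose check, the sketch below estimates how far the head is turned from two 3D eye positions and tests that angle against the limits quoted above. The function names, the coordinate convention (x across the image, z as depth) and the reading of the 2D figure as "within 35 degrees of frontal" are assumptions made for this example, not details of any particular product.

```python
import math

# Limits quoted in the text: up to 90 degrees for 3D, about 35 degrees for 2D.
POSE_LIMIT_DEGREES = {"3d": 90.0, "2d": 35.0}


def estimate_yaw_degrees(left_eye, right_eye):
    """Estimate head yaw from two 3D eye points given as (x, y, z).

    Assumes z is depth: a frontal face has both eyes at the same depth,
    so the eye-to-eye vector lies along the x axis and the yaw is 0.
    """
    dx = right_eye[0] - left_eye[0]
    dz = right_eye[2] - left_eye[2]
    return math.degrees(math.atan2(dz, dx))


def pose_is_usable(yaw_degrees, mode):
    """Return True if the head is turned no further than the mode's limit."""
    return abs(yaw_degrees) <= POSE_LIMIT_DEGREES[mode]


# Hypothetical eye positions in millimeters for a head turned partly away.
yaw = estimate_yaw_degrees((0.0, 0.0, 0.0), (30.0, 0.0, 30.0))
usable_in_3d = pose_is_usable(yaw, "3d")
usable_in_2d = pose_is_usable(yaw, "2d")
```

A real system would estimate pitch and roll as well; yaw alone is used here to keep the example short.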

Measurement

The system then measures the curves of the face on a sub-millimeter scale and creates a template.

Representation

The system translates the template into a unique code. This coding gives each template a set of numbers to represent the features on a subject’s face.
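To make the idea of "a set of numbers" concrete, here is a minimal sketch that turns a handful of measured 3D landmarks into a numeric code by listing the distances between every pair of points. Commercial systems use their own, usually proprietary, encodings; the landmark names, the 0.1 mm rounding and the `encode_template` function are assumptions chosen only for illustration.

```python
import math
from itertools import combinations


def encode_template(landmarks):
    """Encode 3D landmarks as a tuple of pairwise distances.

    landmarks: dict mapping a landmark name to its (x, y, z) position
    in millimeters. Names are sorted so the code always lists the
    distances in the same order.
    """
    if len(landmarks) < 2:
        raise ValueError("need at least two landmarks")
    names = sorted(landmarks)
    return tuple(
        round(math.dist(landmarks[a], landmarks[b]), 1)
        for a, b in combinations(names, 2)
    )


# Hypothetical measurements, not taken from a real face.
template = {
    "eye_inner": (16.0, 0.0, 0.0),
    "eye_outer": (46.0, 2.0, -8.0),
    "nose_tip": (0.0, -38.0, 22.0),
}
code = encode_template(template)
```

Pairwise distances do not change when the head moves or turns, which is one reason a code like this can be compared across captures.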

Matching

If the image is 3D and the database contains 3D images, then matching will take place without any changes being made to the image. However, databases that still hold only 2D images pose a challenge.

A 3D capture records a live, moving, variable subject, whereas the database image it must be compared with is flat and static. New facial recognition technology is addressing this challenge. When a 3D image is taken, different points (usually three) are identified.

For example, the outside of the eye, the inside of the eye and the tip of the nose will be pulled out and measured. Once those measurements are in place, an algorithm (a step-by-step procedure) will be applied to the image to convert it to a 2D image.

After conversion, the software will then compare the image with the 2D images in the database to find a potential match.
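The conversion step can be sketched in a simplified form. In the example below, the three landmarks named above define a flat reference plane, and every 3D point is projected onto that plane to give 2D coordinates. This is only one plausible way to flatten a 3D capture, assumed here for illustration; the text does not say which algorithm real systems apply, and the helper names are hypothetical.

```python
import math


def _sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def _dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def _scale(a, s):
    return tuple(x * s for x in a)


def _unit(a):
    length = math.sqrt(_dot(a, a))
    if length == 0:
        raise ValueError("landmarks must not coincide or be collinear")
    return _scale(a, 1.0 / length)


def landmark_frame(eye_outer, eye_inner, nose_tip):
    """Build a 2D reference frame from three 3D landmarks.

    The first axis runs along the eye; the second is the part of the
    eye-to-nose direction that is perpendicular to the first.
    """
    u = _unit(_sub(eye_inner, eye_outer))
    w = _sub(nose_tip, eye_outer)
    v = _unit(_sub(w, _scale(u, _dot(w, u))))
    return eye_outer, u, v


def project_to_2d(points, frame):
    """Project 3D points onto the landmark plane as (u, v) coordinates."""
    origin, u, v = frame
    return [
        (_dot(_sub(p, origin), u), _dot(_sub(p, origin), v))
        for p in points
    ]


# Hypothetical landmark positions in millimeters.
points = [(0.0, 0.0, 0.0), (30.0, 0.0, 0.0), (45.0, -40.0, 20.0)]
flat = project_to_2d(points, landmark_frame(*points))
```

Because the frame is built from the face itself, the 2D result is the same however the head was turned when the 3D image was captured.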

Verification or Identification

In verification, an image is matched to only one image in the database (1:1). For example, an image taken of a subject may be matched to an image in the Department of Motor Vehicles database to verify that the subject is who they claim to be.

If identification is the goal, then the image is compared to all images in the database, resulting in a score for each potential match (1:N). In this instance, you may take an image and compare it to a database of mug shots to identify who the subject is.
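The difference between the two modes is easy to see in code. The sketch below treats each template as a tuple of numbers and uses the straight-line distance between two templates as the score, where a lower score means a closer match. The distance measure, the threshold value and the sample database are all assumptions for this example, not figures from any real system.

```python
import math


def verify(probe, enrolled, threshold=2.0):
    """1:1 check: does the probe match one claimed identity?

    The threshold is a hypothetical value; real systems tune it to
    balance false accepts against false rejects.
    """
    return math.dist(probe, enrolled) <= threshold


def identify(probe, database):
    """1:N search: score the probe against every enrolled template.

    Returns (subject_id, score) pairs sorted from best to worst match.
    """
    scores = [
        (subject_id, math.dist(probe, template))
        for subject_id, template in database.items()
    ]
    return sorted(scores, key=lambda pair: pair[1])


# Hypothetical enrolled templates and a freshly captured probe.
database = {
    "subject_a": (5.0, 12.0, 13.0),
    "subject_b": (6.0, 11.0, 14.5),
}
probe = (5.1, 12.0, 12.9)

claim_accepted = verify(probe, database["subject_a"])
ranked_candidates = identify(probe, database)
```

Verification answers a yes-or-no question about one claimed identity, while identification returns a ranked list of candidates that an operator or a later processing step still has to confirm.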

Next, we’ll look at how skin biometrics can help verify matches in facial recognition technology.