iOS 18.2 will bring exciting new Apple Intelligence features like Image Playground, Genmoji, Visual Intelligence and ChatGPT integration. These flashy new tools let users create images and custom emoji, look up information using the iPhone’s camera and tap into one of the hottest AI chatbots around.

How well do they work? Keep reading or watch our hands-on video to see iOS 18.2’s new Apple Intelligence features in action.

Hands-on with more Apple Intelligence features in iOS 18.2

Apple Intelligence is the overall name for a suite of AI tools that Cupertino began rolling out in October. That first round of Apple Intelligence features brought some basics, including Writing Tools, system-wide summarizations, a new Siri user interface and the Clean Up tool for eliminating unwanted elements from photos.

The second wave of features, which should arrive next month in iOS 18.2 (currently in developer beta), ups the ante on Apple Intelligence. It delivers the kind of generative tools that have been making waves in the tech world in recent years, amid great controversy. The all-new, smarter Siri likely won’t arrive until iOS 18.4 in April 2025, but this update has a few small features for developers.

Table of contents: Hands-on with more Apple Intelligence in iOS 18.2

- Image Playground

- Image Wand

- Genmoji

- Visual Intelligence

- ChatGPT in Siri

- Improved writing tools

- New Siri APIs

Image Playground

Screenshot: D. Griffin Jones/Cult of Mac

The new Image Playground app lets you generate AI images from scratch. It provides a few different ways to create images. To get started, open Image Playground and tap the + at the bottom of the screen. (You also can find Image Playground inside Apple’s Messages app, in the app menu.)

Image Playground gives you four ways to create an AI image:

- Type in a text prompt by tapping Describe an image and Image Playground will create an image based on your description.

- Tap the Choose Person button in the bottom right to pick a photo of a person you know. (It pulls from the “People and Pets” section of your Photos library, so you should make sure you tag everyone first.) You also can tap the Appearance button and tap Edit to generate a person from scratch.

- Tap the Choose Style or Photo button (with a + icon) then tap Choose Photo to pick any photo in your library as a starting point. Here, you can switch between Animation and Illustration styles.

- Finally, you can tap any of the suggestion bubbles along the bottom of the screen.

After you pick a starting image, Image Playground will begin generating a few options. It makes four at a time. You can swipe over to generate more.

Not satisfied? You can add more and more tags to tweak the image. Tap a button to add one of Apple’s preset tags with themes, costumes, accessories and places. Or you can type additional descriptions into the text box. Tap any of the floating bubbles to remove that prompt.

When you’re finished, tap Done in the upper right to save the image you created. You can share it, delete it or go back and edit it at any time. You also can give it a thumbs-up or thumbs-down to send feedback to Apple.

How good do the Image Playground images look?

Screenshot: D. Griffin Jones/Cult of Mac

Personally, I’m not impressed with this highly touted Apple Intelligence feature. The images created by Image Playground look terrible. Compared to state-of-the-art AI image generation from the likes of Midjourney, the Image Playground creations look two years behind. It struggles to draw straight lines, and parts of the images blend together. It’s a neat party trick that everything happens on-device, so there are no limits on how many images you can generate, but free unlimited garbage isn’t an enticing offer.

Also, Apple must add safeguards to Image Playground. All too easily, without any complicated prompt injection, I used it to generate an image of Adolf Hitler standing on the White House’s front lawn. If I were a mean-spirited middle-schooler, I could use it to generate embarrassing images of my classmates for bullying and harassment.

I don’t think Apple should ship the Image Playground feature, because it’s going to be a never-ending battle of stamping out fires as people figure out how to use it in unseemly ways, and for little benefit. The best images don’t even look that good.

Image Wand

Screenshot: D. Griffin Jones/Cult of Mac

Image Wand is a related but different feature integrated into Notes, Pages, Keynote and other writing apps. It can convert an existing drawing into an AI-generated image. Or it can create an image from scratch based on the writing on the page.

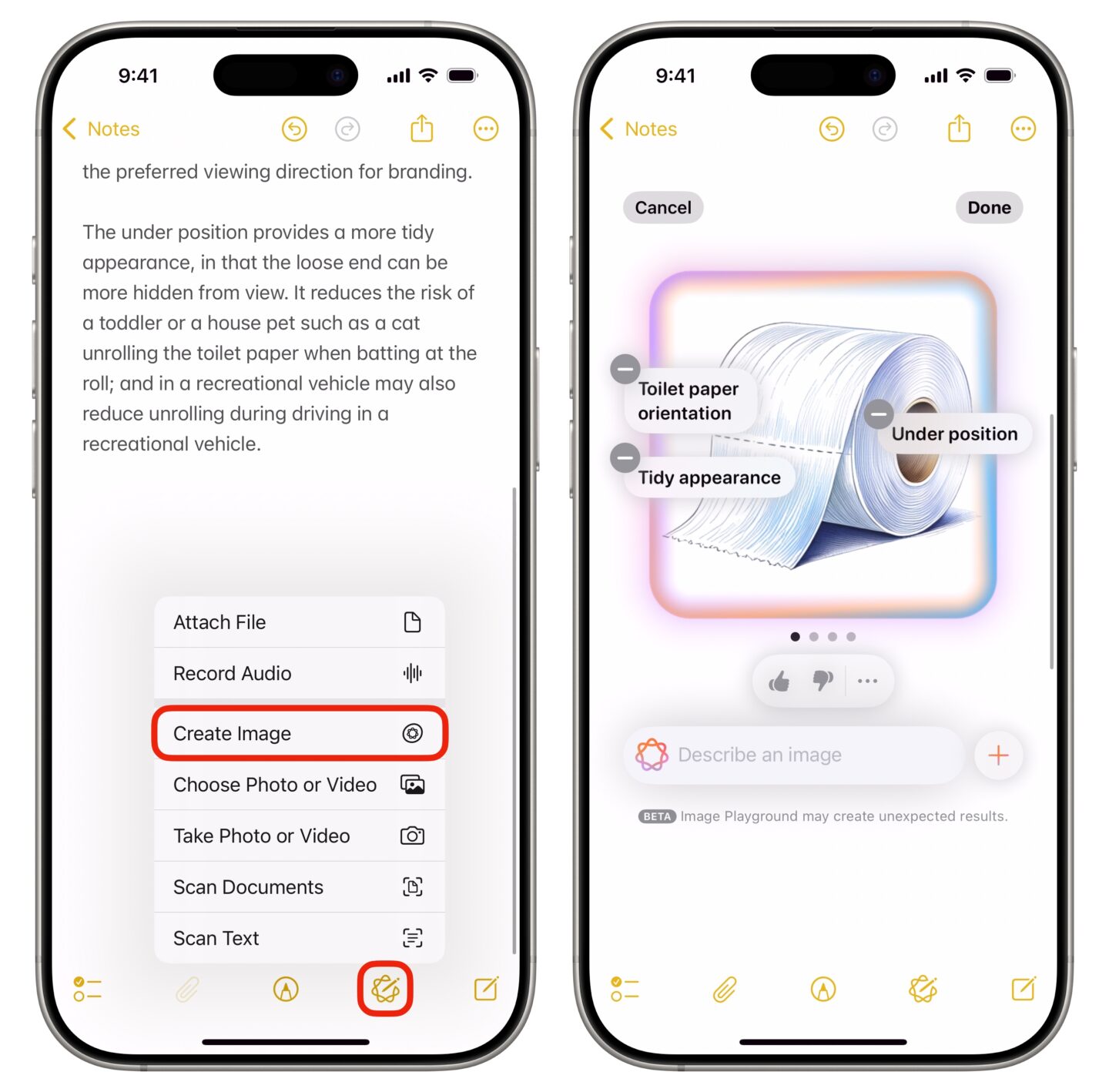

To use Image Wand in the Notes app, tap the Attachments button (paperclip icon), then tap Create Image. It’ll use the context of your notes to generate an image. Just as with Image Playground, you can add prompts by typing in the text box, remove one by tapping its floating bubble, and tap Done to save the image or Cancel to discard it.

Screenshot: D. Griffin Jones/Cult of Mac

If you have a rough drawing or sketch in your note already, you can convert it into an AI image. Just tap the Markup button (pen icon), then tap the new Image Wand tool. Circle part of a drawing to use it as input, then type in your own description of the image and Image Wand will generate something new. Image Wand uses a new style by default, Sketch. But if you want to use Animation or Illustration, tap the + button to switch.

It seems to take a broad sort of inspiration from your sketch, but often deviates from it, putting much more weight into your description. You can write a completely unrelated description to your drawing and it’ll just draw that instead. Which, in my opinion, defeats the purpose.

I can see some use for this tool on the iPhone, where at best you can only make rough sketches with your finger — as long as you’re OK with the ugly generative art that Image Wand makes. But if I owned an iPad and an Apple Pencil, I don’t think I’d see the point. Image Wand doesn’t enhance your drawing so much as it paints over it. No matter how much detail you add, the AI will still ignore it.

Genmoji

Screenshot: D. Griffin Jones/Cult of Mac

If you’ve ever been irritated because there’s no perfect emoji for some occasion, worry no more. With the new Apple Intelligence feature called Genmoji in iOS 18.2, you can create your own.

To use it, switch to your iPhone’s emoji keyboard and tap the New Genmoji button to the right. The tool is also cleverly built into the keyboard’s emoji search field — if your search returns no results, you can tap Create New Emoji to jump right in.

Then you can type in a simple one- or two-word prompt describing what you want, like “ostrich,” “tablet” or “tissue box.” You don’t need to jam-pack it with a long, complicated prompt — it’s already trained on the visual style of Apple emojis.

Screenshot: D. Griffin Jones/Cult of Mac

Genmoji is really great at generating emoji of specific dog breeds. If your dog doesn’t resemble any of the standard emoji (🐶🐕🐩🦮🐕‍🦺), you’ll be glad that you can create a Bernese Mountain Dog emoji, a pitbull emoji, a greyhound emoji and so much more. Same with cats: You can make a calico cat, a Maine Coon, a tuxedo cat, etc. Genmoji can help out with animals that are underrepresented in emoji, like birds, lizards, snakes and more.

The tool either comes up with something that looks absolutely perfect or totally fails to draw it correctly. The shovel emoji and the tissue box emoji look spot-on with Cupertino’s graphic design. I tried to create a trombone emoji, and it drew abominable crimes against brass instruments — including, somehow, a violin.

It works with people, too. Type in someone’s name from your photo library and you can create an emojified version of that specific person. You can depict them laughing, smiling, frowning and more. These look all right, and I think this is a much better use of the technology than Image Playground.

Visual Intelligence

Screenshot: D. Griffin Jones/Cult of Mac

The new Apple Intelligence feature known as Visual Intelligence provides a quick and easy way to find information on an object in the real world. Apple built Visual Intelligence exclusively for iPhone 16 owners. You can click and hold the new Camera Control on those devices to open the visual look-up tool. Click the Camera Control again, or tap one of the on-screen buttons, to look up something.

The following two options are always available:

- Ask will send the picture to ChatGPT. OpenAI’s chatbot might be able to explain what you’re looking at, and you can ask it follow-up questions for more information. Trying this out with a bunch of weird objects around my office, I came away pretty impressed by what ChatGPT got right, but of course I caught a few mistakes. You can’t entirely trust ChatGPT as your sole source of information; you should always fact-check for something important.

- Search uses a Google reverse-image search to identify the object. This proves useful if you want to find a product or object online.

Visual Intelligence’s other smart features are more context-dependent:

- You can point the camera at something with event information on it, like a poster or document, and quickly add the event to your calendar. If it’s a music festival or concert, the tool might match it to an event and fill in details. My testing for this so far yielded spotty results, but I haven’t really been able to test it thoroughly. It could become super-handy.

- Take a picture of a restaurant, and Visual Intelligence will reference your location with Apple Maps information to look up the restaurant or business you’re looking at. You can see a phone number, website, menu and more. This seems like it could be incredibly handy. If you’re walking down the street deciding where to eat, you might be able to quickly get information without manually searching for every name you see.

Technically speaking, none of these features are super-smart. ChatGPT and Google Reverse Image Search are both third-party services. Pulling information out of the iPhone camera like events and phone numbers is based on Live Text, a feature the iPhone has had for years. And Visual Intelligence does not use AI to do much when identifying businesses; it’s mostly using the iPhone’s GPS and compass with Apple Maps — any iPhone can do that.

However, in practice, they’re still useful, practical features. I just think that if Apple Intelligence wasn’t a big marketing and branding push, Apple would be more up-front about this being a convenient repackaging of different third-party services, rather than framing it as a new AI technology.

ChatGPT integration with Siri

Screenshot: D. Griffin Jones/Cult of Mac

In iOS 18.2, Apple Intelligence seamlessly integrates ChatGPT into Siri. If you ask Siri a general world knowledge question, instead of showing you Google search results, it will ask if you want to ask ChatGPT. Tap Ask if you want to pass along your request.

You also can ask ChatGPT about what you’re looking at. You can ask Siri, “What is this?” while you’re looking at a photo, reading an article, browsing the web or watching a video. Siri will send ChatGPT a screenshot (with your permission), and ChatGPT will analyze it and provide an answer.

While a large language model like ChatGPT can only provide an educated guess at best, and Apple reminds you to “Check important info for mistakes,” this feature can deliver helpful information.

Screenshot: D. Griffin Jones/Cult of Mac

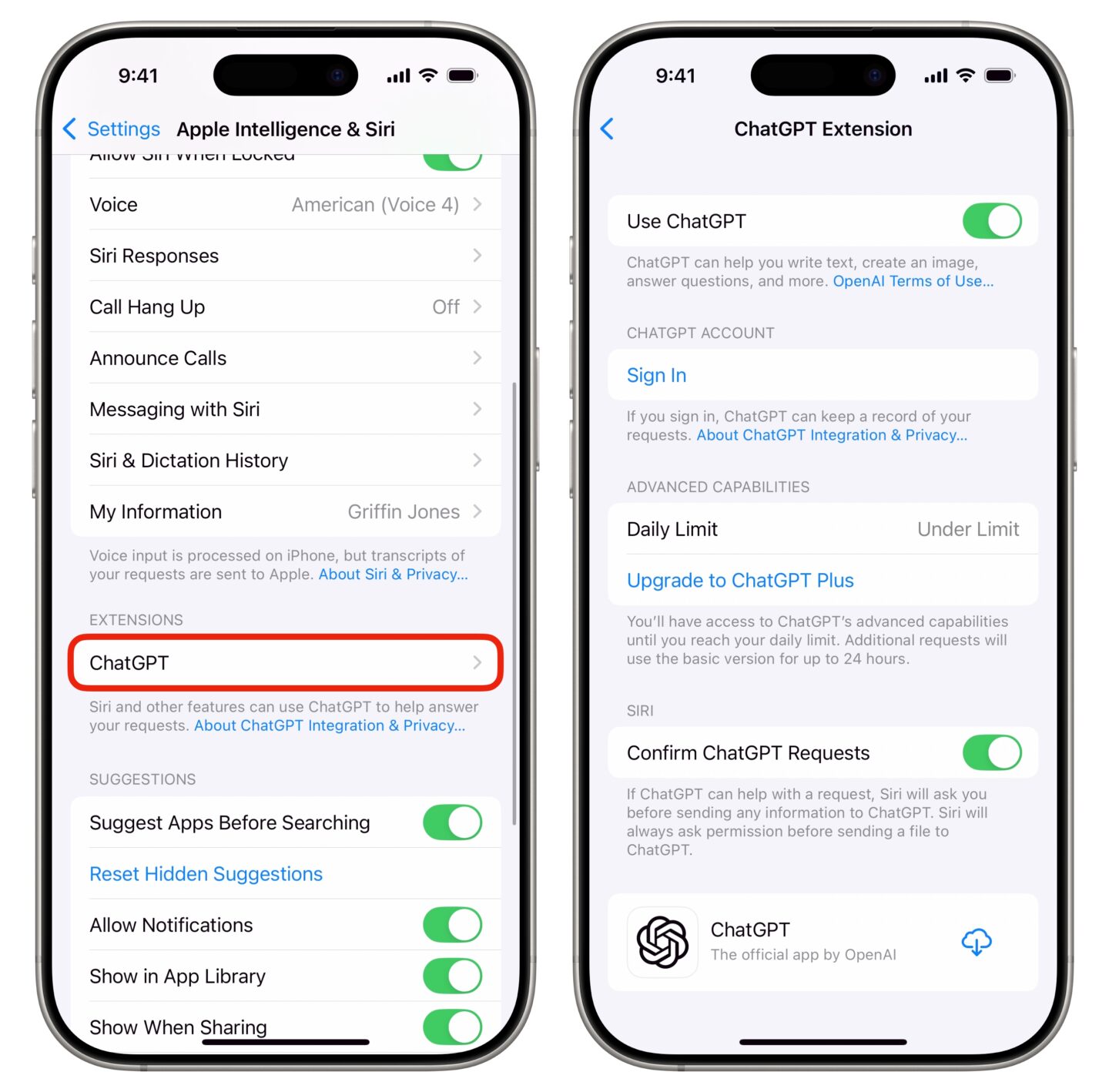

If you plan on using ChatGPT frequently, check out Settings > Apple Intelligence & Siri > ChatGPT where you will see some options. Turn off Confirm ChatGPT Requests if you don’t want to be asked permission to send your question to ChatGPT every time.

Anyone will be able to use ChatGPT for free, without creating an account, with a limited amount of daily usage. If you have a ChatGPT account and want greater access, or to keep a history of your questions, you can tap Sign In. You can also Upgrade to ChatGPT Plus directly from this page for $19.99/month.

Improved Apple Intelligence Writing Tools in iOS 18.2

Screenshot: D. Griffin Jones/Cult of Mac

Not all the Apple Intelligence features in iOS 18.2 are new. Writing Tools — which offered nine tools for proofreading, rewriting and formatting in iOS 18.1 — adds new capabilities in 18.2.

Now, you will see a text box where you can Describe your change if you want something unique. You can ask Writing Tools to make significant changes to a block of text, in weird and wonderful ways. You can try things like “Make it sound Shakespearean” or “Make everything opposite” (which usually leads to funny results). You can even give precise directions, like “Make everything title case” or “Make every verb italicized,” which genuinely impressed me.

There’s also a Compose button at the bottom. Powered by ChatGPT (running OpenAI’s GPT-4o model), this addition to Writing Tools in iOS 18.2 lets you ask the AI to write something from scratch based on a prompt. From the dropdown menu above the keyboard, you can choose how much context you want to send it based on what you’ve already written. You also can tap + to share a file or image.

Composing with ChatGPT might take a moment or two, depending on your internet connection, as it doesn’t run on-device. But ChatGPT is widely considered best-in-class for writing.

New Siri APIs

This latest beta includes the first new Siri feature available for third-party apps. Siri will be able to see what’s on your screen, so you may be able to ask it questions like, “What should I title this presentation?” “What’s this website I’m on?” “What else should I add to this email?” and more.

This is one small step towards the new Siri, but at this stage, it’s a behind-the-scenes change. Its usefulness is highly dependent on whether developers update their apps to use it. At the time I’m testing this, it doesn’t look like Apple’s own apps are using these APIs yet. We’ll have to see.
