If you haven’t looked at any of Apple’s accessibility features because you’re not blind or deaf, and don’t think they would make your life easier, you might be surprised.

Apple built a handful of accessibility features into iOS 17 that let people with various disabilities use the iPhone in new and unexpected ways. However, absolutely anyone can take advantage of these tools, which prove surprisingly helpful in certain situations.

You can already get live captions to watch videos silently, lock your phone into one app to keep people from snooping around, play soothing ocean or forest sounds and more.

In iOS 17, five accessibility features take things even further. Assistive Access strips your phone down to its bare essentials to make it easier to use; Live Speech and Personal Voice let you type on the keyboard and have your iPhone speak for you, even in your own voice; Detection Mode and Point and Speak help you get around using your iPhone camera.

Our hands-on demo will show you what these features can do for you.

Hands-on with 5 new accessibility features in iOS 17

Apple previewed these five new accessibility features earlier this year for Global Accessibility Awareness Day. Now that we’re digging into the iOS 17 developer betas, we finally get a chance to see how they work.


Keep in mind that Apple has not yet released iOS 17 to the public. Since this is a beta version of the software, things could change as Apple’s engineers tweak iOS 17 before its final release.

You can get the latest developer beta by turning on beta software updates in Settings. Beware, though: Beta software is unstable, can drain your battery more quickly and carries the risk of data loss. If you can wait, expect a public release of iOS 17 this September.

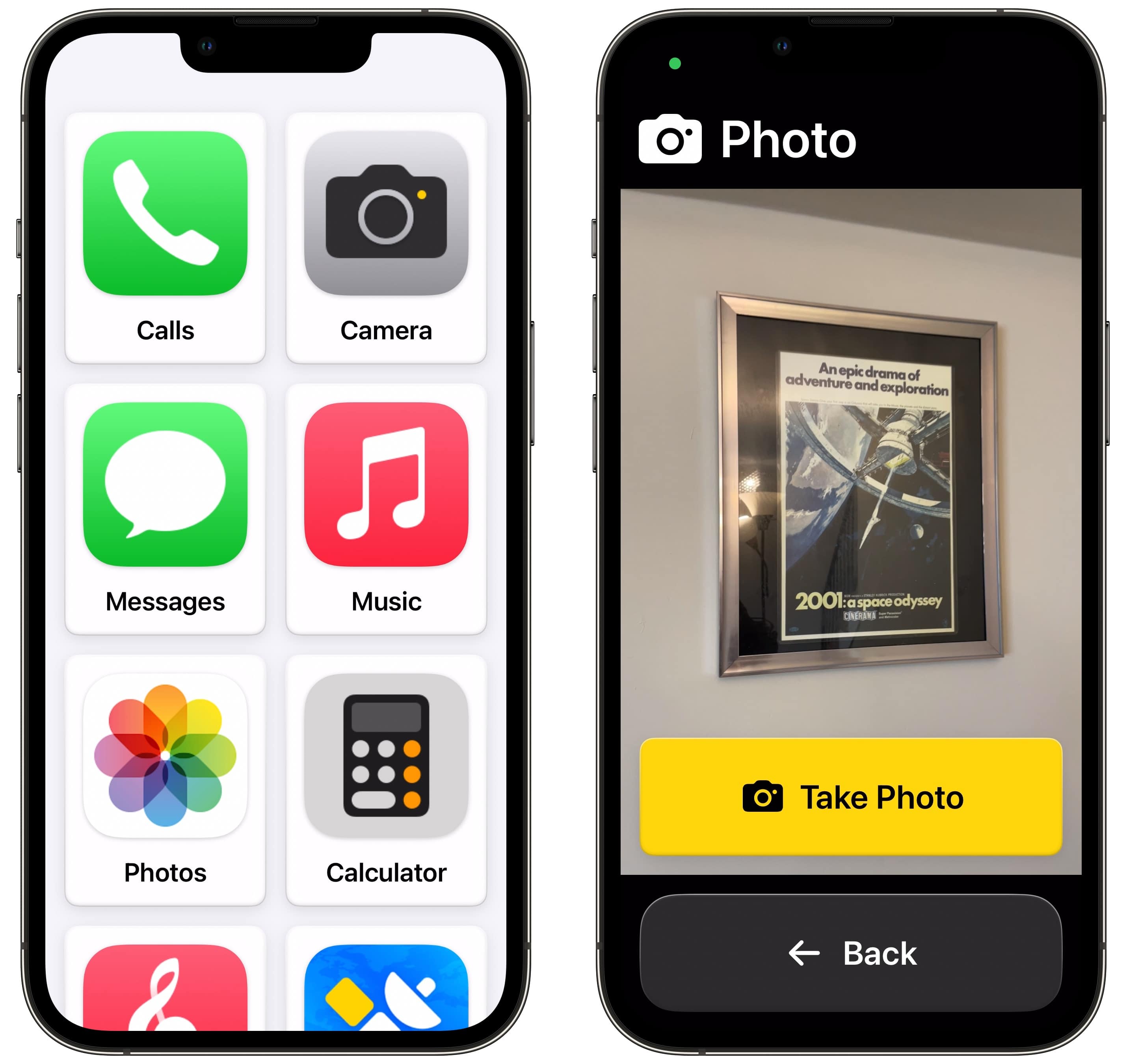

No. 1: Assistive Access

Screenshot: D. Griffin Jones/Cult of Mac

The Assistive Access feature simplifies some of the standard iPhone apps down to their most basic functions. It limits the cognitive load while keeping loved ones in touch with a modern device.

I remember watching TV ads for cellphones like the Jitterbug, a flip phone marketed to older users that came with giant numbers and limited, easy-to-use features. Assistive Access feels like a reimagining of that for 2023.


If someone you know would be overwhelmed by the full features of a smartphone, you can turn on Assistive Access to make an iPhone easier to use. It transforms the iPhone user interface, with big buttons for all the main features like Messages, Calls and Camera.

With this feature activated, taking pictures, making calls and sending messages become much more straightforward. And you can still enable all the normal apps from the App Store if you need to install something else, like a health monitoring app.

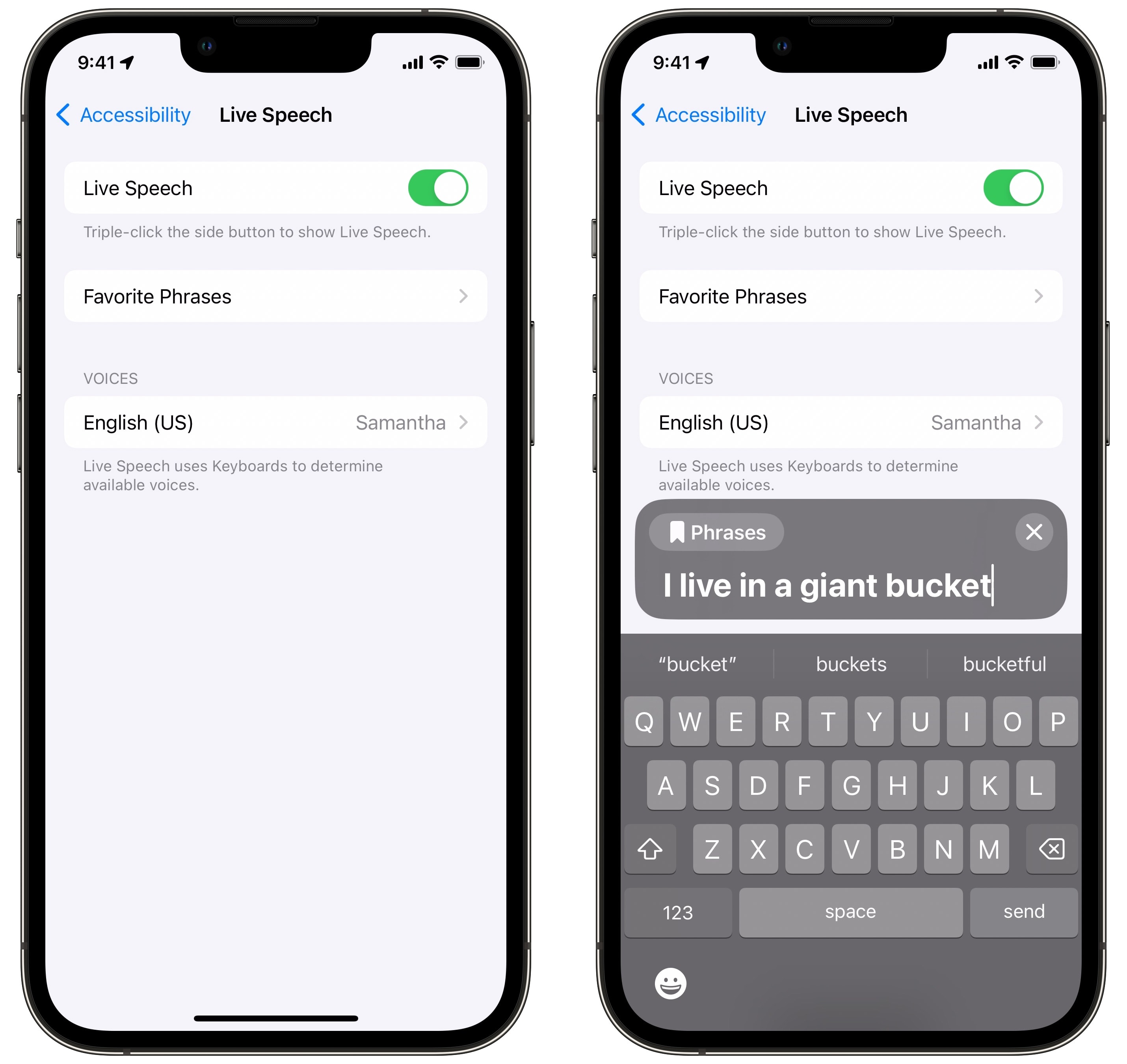

No. 2: Live Speech

Screenshot: D. Griffin Jones/Cult of Mac

If you’re losing your voice, Live Speech gives you the ability to turn text into speech, either in person or on a call. To find the feature, go to Settings > Accessibility > Live Speech (toward the bottom). Turn on Live Speech and tap Favorite Phrases to make a few shortcuts for phrases you say often.

After that, you can activate Live Speech by triple-clicking the iPhone’s side button or from the Accessibility shortcut in Control Center.

Then just type using the keyboard in the popup, and press Send for your iPhone to speak the text aloud. For longer phrases, it’ll highlight each word as it speaks. Tap Favorite Phrases to access your shortcuts.

No. 3: Personal Voice

Screenshot: D. Griffin Jones/Cult of Mac

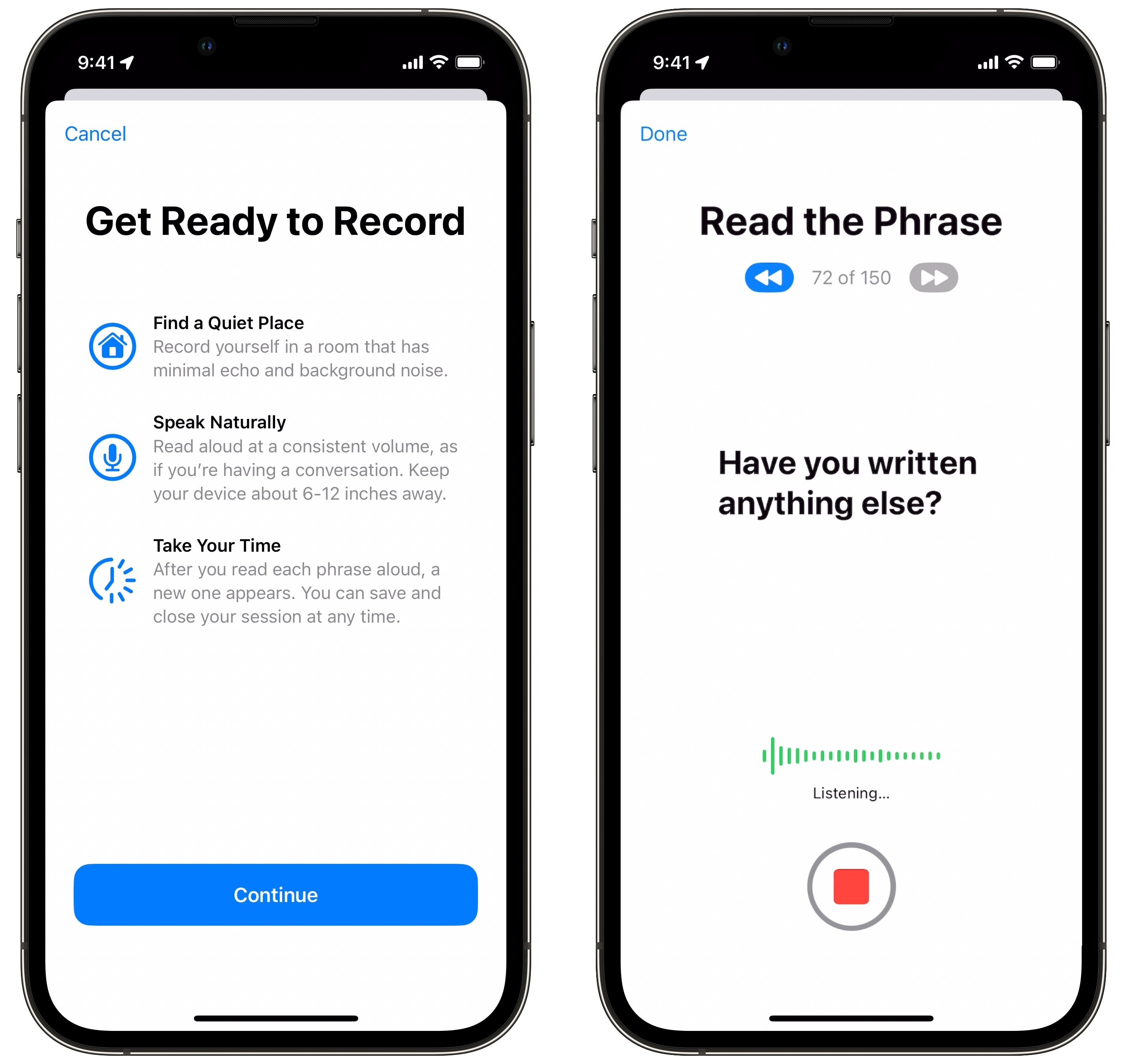

The Personal Voice feature builds on Live Speech by letting you use your own voice. You just need to take some time to read a bunch of phrases out loud, and your phone will be able to re-create your voice later on. Apple devised Personal Voice for users “at risk of losing their ability to speak — such as those with a recent diagnosis of ALS (amyotrophic lateral sclerosis) or other conditions that can progressively impact speaking ability.”

However, as with any accessibility feature, anyone can use it. To do so, go to Settings > Accessibility > Personal Voice and tap Create a Personal Voice. You’ll need to find a quiet space and hold your phone about 6 inches from your face as you talk. This can take anywhere from 15 minutes to an hour, according to Apple.

Once you finish setting up Personal Voice, you need to let your iPhone stay plugged in for a while as it churns through the recordings and creates a synthesized re-creation of your voice. Then, you’ll see Personal Voice as an option under Live Speech.

In practice, I can definitely tell that it’s using the recordings of my voice. It sounds somewhat like me, but it doesn’t sound as emotive and expressive as some of the newer advanced voices for Siri. Take a listen for yourself.

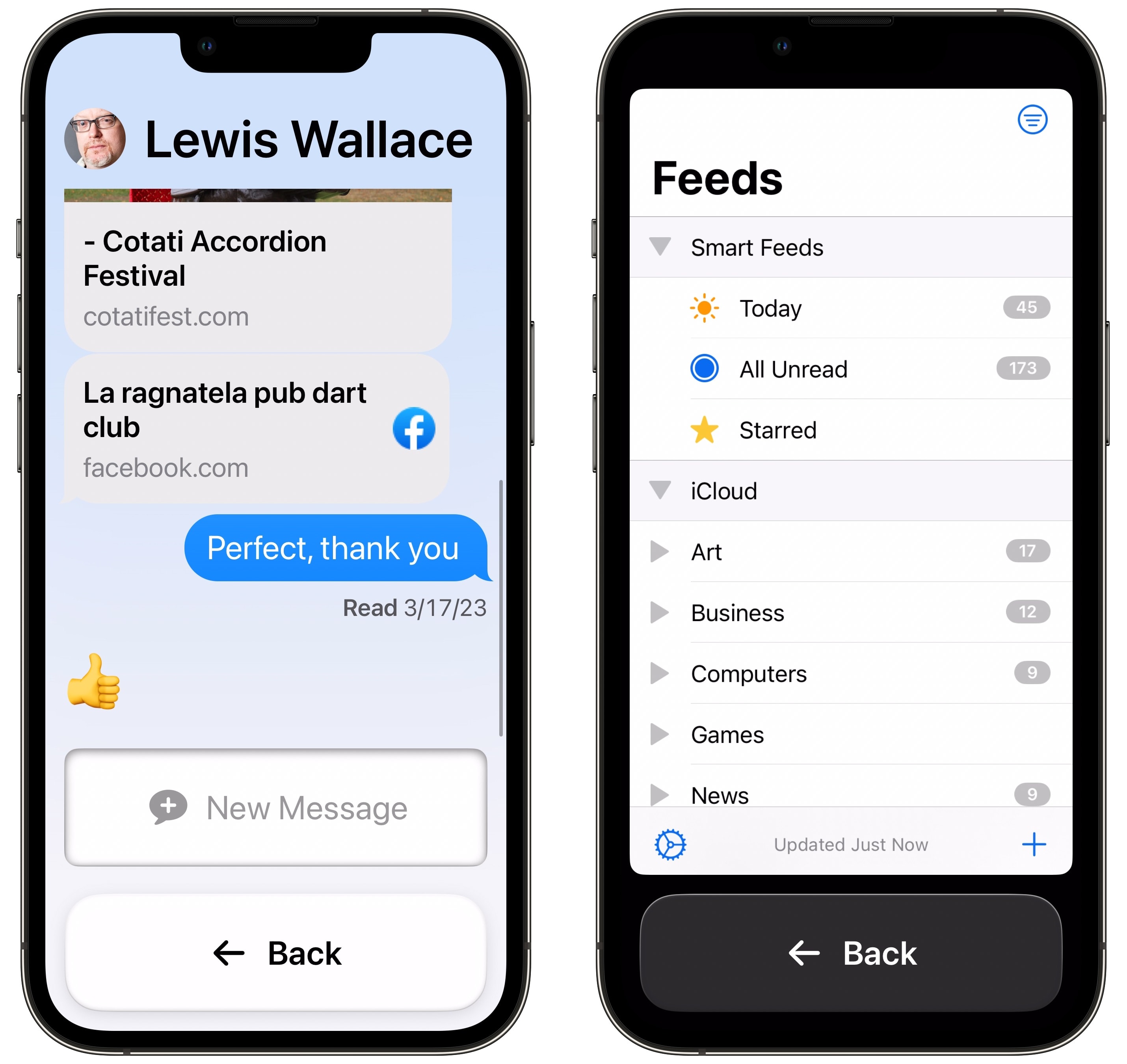

No. 4: Detection Mode

Screenshot: D. Griffin Jones/Cult of Mac

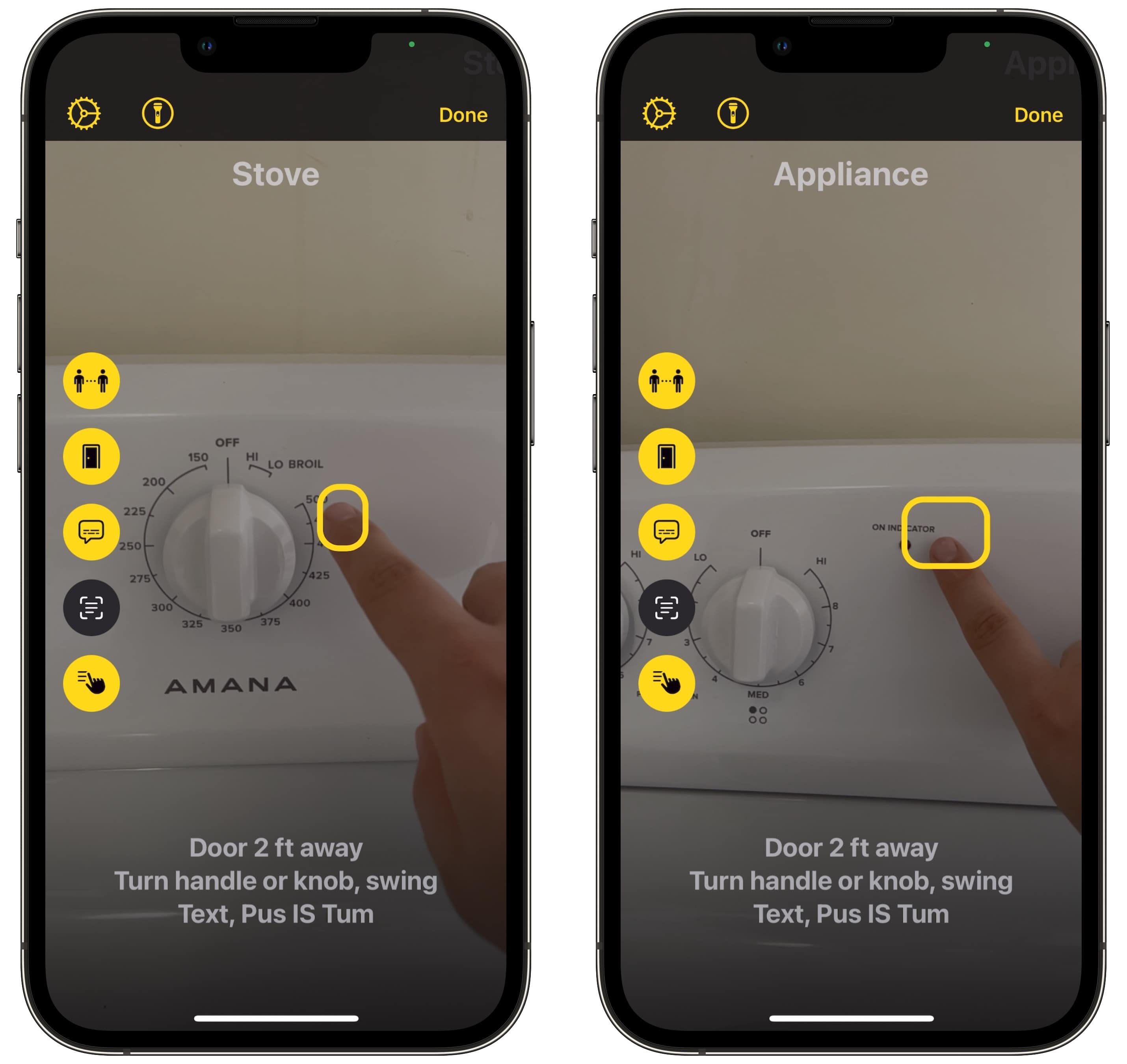

With Detection Mode, you can use your iPhone camera to identify things around you like people, doors, appliances and other objects. It’s available inside Apple’s Magnifier app, which you can download free from the App Store if it’s not already on your iPhone.

To use Detection Mode, open the Magnifier app and tap the Detection button on the right — it looks like a square. Along the left, press the icons to turn on detection features:


- People Detection will show you how close you’re standing to someone else.

- Door Detection will tell you how close you are to a door, how to open it, what’s written on it and various other attributes. Haptic feedback and clicking noises get faster and louder as you get nearer.

- Image Descriptions will tell you what your camera is pointed at using object detection. This produced mixed results for me. If you live in a house with wood floors, be prepared for “Wood Processed” to stay on the screen the entire time. Sometimes the feature got stuck during my testing, not updating for a while as I pointed it around the room. But when it works, it works really well.

No. 5: Point and Speak

Screenshot: D. Griffin Jones/Cult of Mac

Point and Speak works in tandem with Detection Mode. In Detection Mode, tap on Point and Speak — the bottom icon. Then you can hold your hand out, point at something, and have your iPhone read to you.

If you find the markings on your oven dial hard to see, for example, your iPhone can read the numbers to you so you don’t burn your roast, or your house. Your hand needs to be extended quite clearly in front of you, but Point and Speak worked pretty well in my limited testing.
