I have an admission: Though I frequently review new Apple products, I don’t always buy them. Like many of you, I can’t afford to update every bit of Apple hardware every time the company revises one of its products. So I have to judge carefully when my stuff has become too old and needs to be replaced with the shiny and new.

Of course, Apple would love us all to buy new stuff all the time. But the company has to earn its sales the hard way. I might buy a new iPhone because of an upgraded camera or a new MacBook Air because of a new design and a faster processor. I might bypass the latest Apple Watch because the new features just don’t matter to me.

As the heat from the iPhone’s huge acceleration of growth begins to cool down and iPad and Mac sales drop from their pandemic-driven heights, Apple is looking for reasons to sell new hardware. And now, it may have found a big one in a somewhat unexpected place: AI.

AI models eat RAM

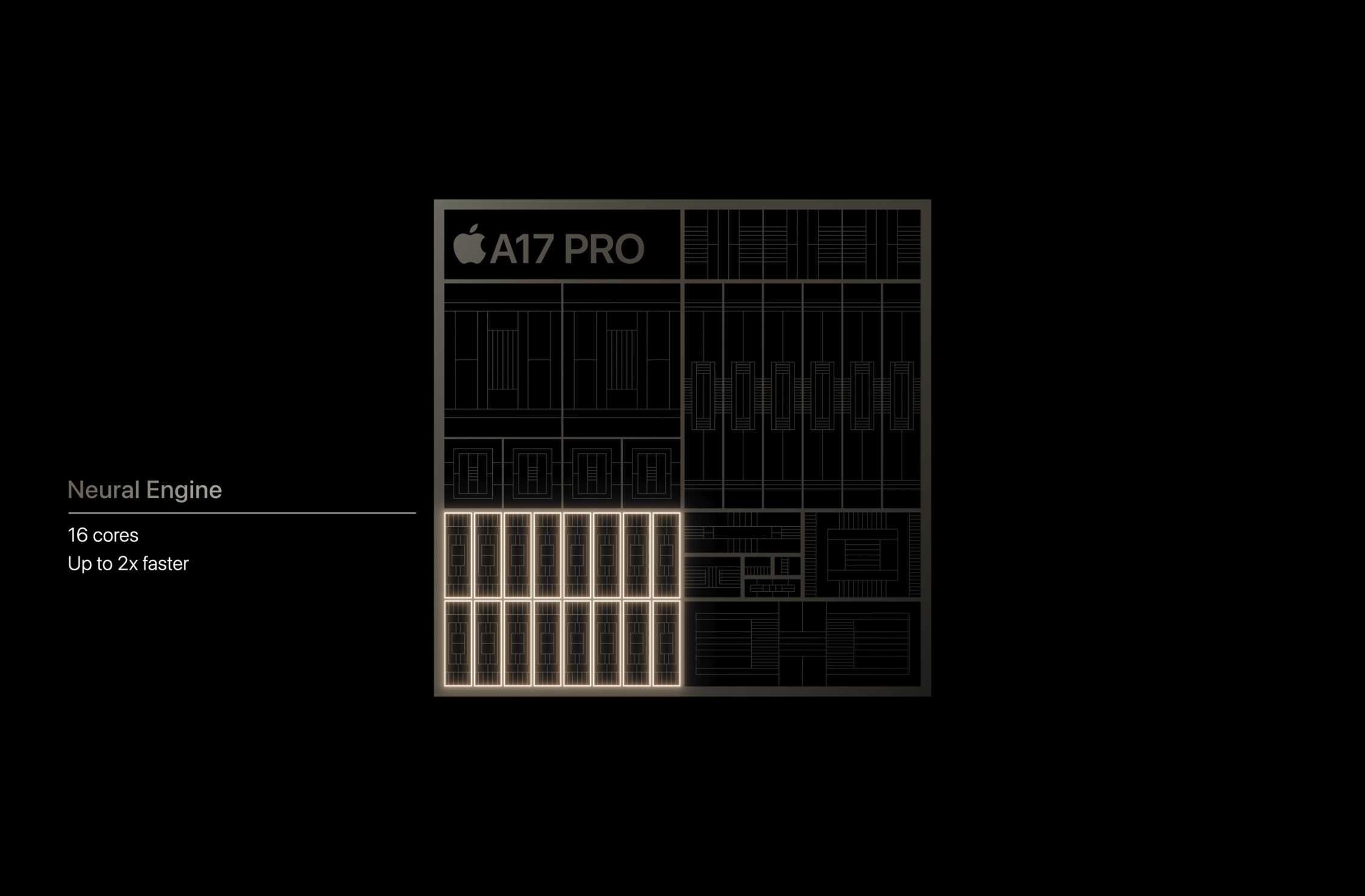

Artificial Intelligence algorithms are software, of course. Theoretically, all current Apple hardware should be able to run AI stuff. Apple’s been building Neural Engines into its chips for years, for example. And yet the rumored addition of major AI features to Apple’s platforms starting this fall may fuel a new wave of upgrades.

This is because when we discuss AI these days, we’re largely discussing Large Language Models (LLMs), things like OpenAI’s ChatGPT and Google’s Gemini. Apple is reportedly building its own LLM, intending it to run natively on Apple devices rather than being outsourced to the cloud. This could increase speed dramatically, as well as enhance privacy.

But here’s the thing: LLMs really need memory. Google has barred Gemini Nano, a model likely quite similar to what Apple is planning for the iPhone, from all but the largest Google Pixel phone, seemingly because of memory limitations.

The most RAM ever in an iPhone is the 8GB of memory in the iPhone 15 Pro and Pro Max. While iOS has proven generally to be better at managing memory usage than Android, that’s still a relatively small amount of RAM, and would seem to be the bare minimum capable of running an on-device LLM of the kind Apple and Google are working on.
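
To put rough numbers on that, here is a back-of-the-envelope sketch of how much memory a model's weights alone would occupy. The parameter counts and precisions are hypothetical values chosen for illustration; neither Apple nor Google is the source for them, and the function name `weights_gb` is my own.

```python
# Rough estimate of the RAM needed just to hold an LLM's weights.
# All model sizes and precisions below are hypothetical, for illustration only.

def weights_gb(params_billions: float, bits_per_param: int) -> float:
    """Return the weight footprint in gigabytes (1 GB = 1e9 bytes)."""
    bytes_total = params_billions * 1e9 * bits_per_param / 8
    return bytes_total / 1e9

DEVICE_RAM_GB = 8  # the iPhone 15 Pro figure cited above

if __name__ == "__main__":
    for params in (2, 3, 7):      # assumed model sizes, in billions of parameters
        for bits in (16, 4):      # 16-bit weights vs. 4-bit quantized weights
            size = weights_gb(params, bits)
            share = size / DEVICE_RAM_GB
            print(f"{params}B params @ {bits}-bit: {size:.1f} GB "
                  f"({share:.0%} of {DEVICE_RAM_GB} GB)")
```

By this rough math, a 3-billion-parameter model stored at 16-bit precision would claim about 6GB of an 8GB phone before the operating system or any app gets a byte. Real-world usage also includes working memory beyond the weights, so these figures are best read as a floor, not a forecast.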

Given that Apple reportedly will unveil its AI efforts at WWDC in June, it can’t really show off iPhone features that don’t work on any current models. But it’s not unreasonable to assume that many of the iOS AI features might be limited to iPhone 15 Pro models–because they’re the only ones with 8GB of memory. (A new line of iPhones in the fall would presumably all ship with sufficient memory.)

Neural Engines have been part of Apple silicon for a while, but are they enough to address the needs of generative AI?

Apple

And just like that, Apple’s AI announcements may provide a huge set of features to motivate prospective buyers. Want to use Apple’s most awesome new AI features? Unless you’ve just bought the highest-end iPhone, you’ll need to upgrade.

One step beyond

On the Mac, things will probably be a little easier. Macs are beefier than iPhones, and it’s likely that most Apple silicon Macs will do well with an Apple-built LLM, though even there it may be the case that M1 Macs will lag a bit behind the M2 and M3 versions.

Still, I’m starting to think that the most compelling reason that someone who owns an Apple silicon-based Mac might have to upgrade will be the slow processing of AI models, which can demand lots of memory and many GPU cores. I’m a big fan of M1 Macs, including the low-cost M1 MacBook Air, but Apple’s next-generation AI features may make the M1 feel old.

Then there’s the Apple Watch. Its hardware just got upgraded to support on-device Siri for the first time, which suggests that it might be a while before it’s got enough oomph to support an on-device LLM. But the more I think about it, the more I realize that I would upgrade my Apple Watch in a heartbeat if I could get access to a better, more responsive voice assistant.

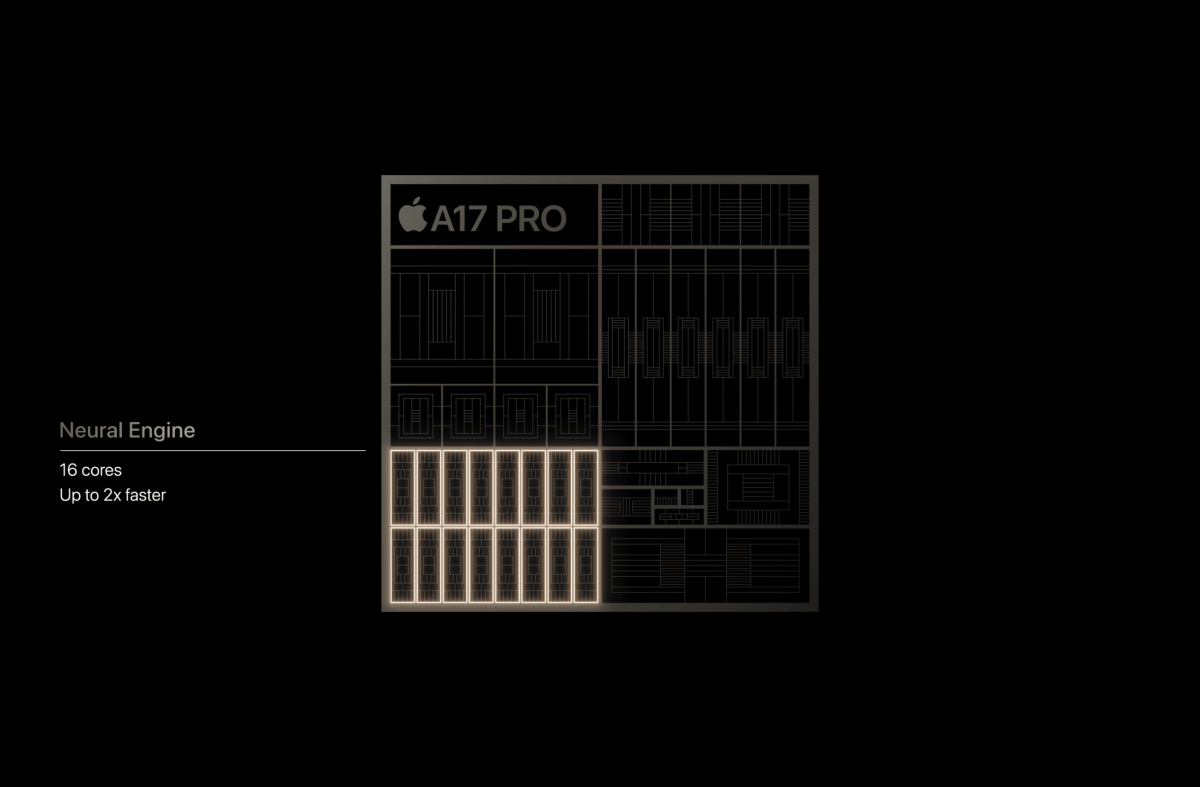

Apple sold a ton of M1 Macs, but it’s possible that they may not be powerful enough (or at least won’t have enough memory) to handle on-device AI processing.

Source: Apple

Of course, it’s still incumbent on Apple to ship AI features that people want. One of Apple’s most steadfast traits through the years is the company’s ability to take cutting-edge technology and build it into features that users actually value. Shipping an LLM and other AI features will not be a cure-all–they need to be built into functionality that people will want to actually use.

But if Apple can manage to infuse AI into its operating systems in ways that make them more appealing, and if, by happy coincidence, those features require faster processors and more memory, that’s going to motivate a round of hardware upgrades. And that’s good for Apple, because while OS updates are free, new iPhones absolutely are not.

I’m not thrilled about the idea of replacing my Apple hardware, but I’d rather do it because I’m motivated by an awesome AI-based feature than because I’m tired of the color of my laptop or the shape of my iPhone.