Apple’s Vision Pro headset relies heavily on hand tracking for controls and user interactions. This allows for a more immersive experience than headsets that use dedicated controllers. Now it appears the Cupertino company is planning to extend in-air hand gestures beyond its AR/VR headset to other devices, such as the Apple TV box or MacBook.

At present, the Apple Vision Pro enables eye and hand tracking through a set of cameras and sensors. These are paired with sophisticated software algorithms to allow controller-free input. Apple’s latest patent filing (via Patently Apple) describes combining several devices to mimic an experience similar to that of visionOS.

Using midair gestures to control an Apple TV or MacBook

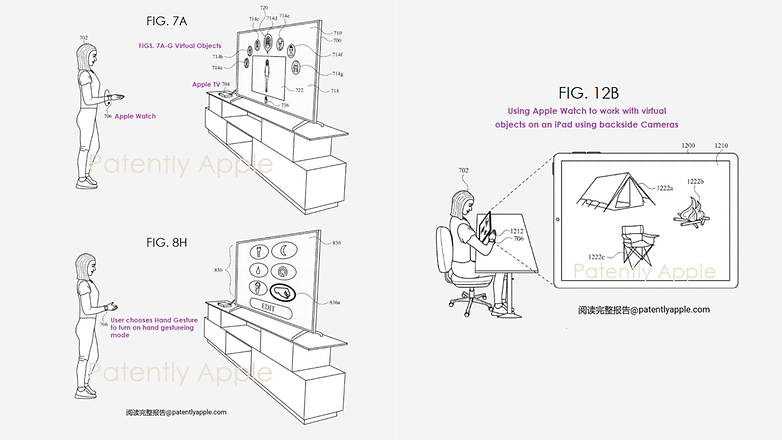

One of the included illustrations shows a user wearing an Apple Watch making in-air hand gestures, which are read and detected by an Apple TV equipped with cameras and sensors. The smartwatch likely carries extra components to account for the hand’s orientation and position. All interactions with the UI are then shown on a TV or monitor.

As with other applications, hand tracking could be useful in many ways beyond basic controls. For example, a user could draw objects or compose a message in midair without an external input accessory.

Apart from the Apple Watch and Apple TV, the application also notes that the technology could work with a MacBook laptop or Mac desktop. An iPad could even serve as both display and tracker, given that it already comes with cameras and sensors.

The idea behind the patent seems feasible given currently available technology, so Apple’s concept could plausibly materialize in real-world products in the coming years. Do you think this concept is practical and useful?