Developing some of the Apple Vision Pro's key features involved a lot of neuroscience, according to a former Apple engineer, with the headset relying on various tricks to predict what a user is about to do in the interface.

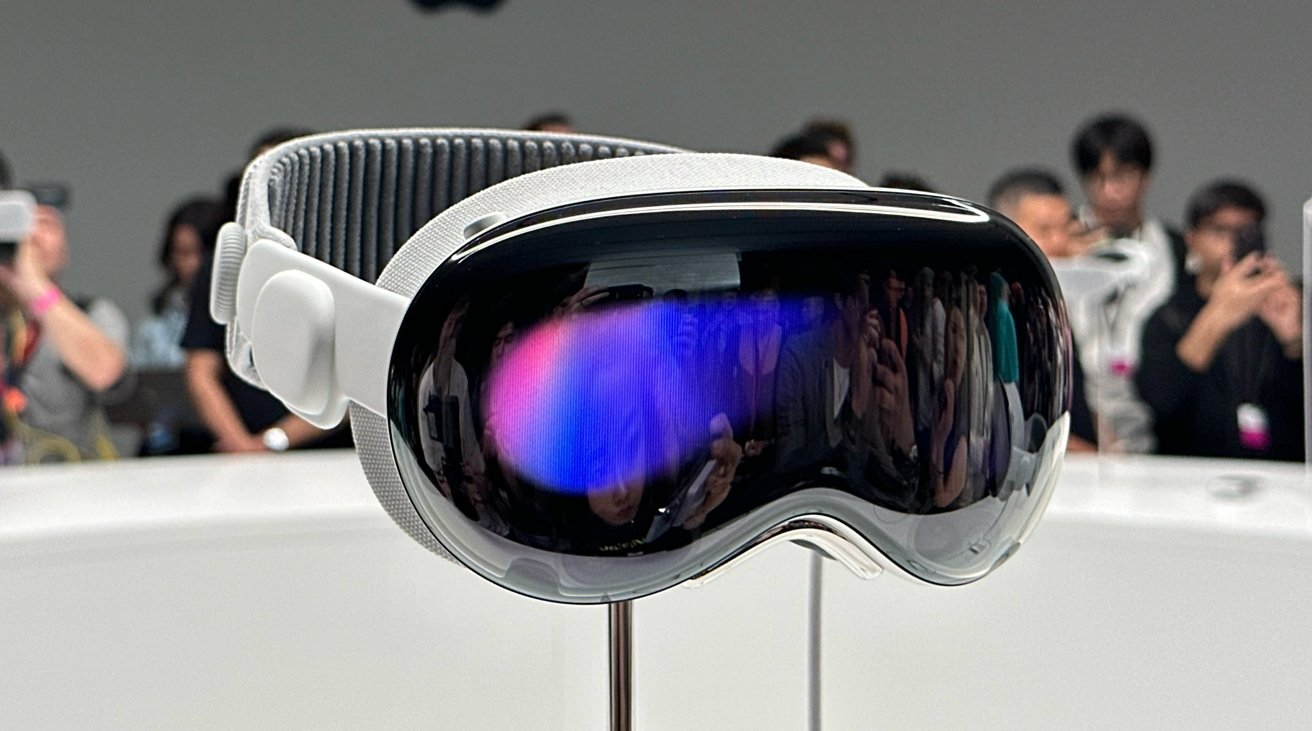

The main interface of Apple’s Vision Pro headset relies on eye tracking and gestures for navigation, so the system needs to determine exactly what the user is looking at as quickly as possible. In a Twitter post on Monday, a former Apple engineer pulled back the curtain on some of the early thinking that went into the project.

Sterling Crispin spent over three years as a Neurotechnology Prototyping Researcher in the Technology Development Group at Apple, the longest he has “ever worked on a single effort.” While there, he worked on elements that supported the foundational development of the headset, including the “mindfulness experiences” and other “ambitious moonshot research with neurotechnology.”

Crispin likened some of this work to “mind reading,” such as predicting what a user would click on before they did.

Crispin says he is “proud of contributing to the initial vision” of the headset. He was part of a small team that “helped green-light that important product category,” which he believes could “have significant global impact one day.”

While most of his work remains under NDA, Crispin was able to discuss details that have been made public in patents.

Mental states

Crispin’s work involved detecting the mental state of users based on data collected “from their body and brain when they were in immersive experiences.”

Using eye tracking, electrical activity, heartbeats, muscle activity, blood pressure, skin conductance, and other metrics, AI models could attempt to infer the user’s cognitive state. “AI models are trying to predict if you are feeling curious, mind wandering, scared, paying attention, remembering a past experience, or some other cognitive state,” writes Crispin.

One of the “coolest results” was being able to predict when a user was going to click on something before they did.

“Your pupil reacts before you click in part because you expect something will happen after you click,” Crispin explains. “So you can create biofeedback with a user’s brain by monitoring their eye behavior, and redesigning the UI in real time to create more of this anticipatory pupil response.”

He describes it as a “crude brain computer interface via the eyes,” one he would choose over “invasive brain surgery any day.”

Influencing the user

Another trick for determining a user’s mental state involves flashing visuals or playing sounds in ways the user may not consciously perceive, then measuring their reaction to that stimulus.

Another patent explained how machine learning and body signals could be used to predict how focused or relaxed a user is, or how well they are learning. Using this information, the system could adapt the virtual environment to enhance the desired state.

Crispin adds that there is a “ton of other stuff I was involved with” that hasn’t appeared in patents, and he hopes more of it will come to light down the road.

While generally happy with the impact of the Vision Pro, Crispin warns that it is “still one step forward on the road to VR,” and that it could take another decade for the industry to fully catch up.