An AI tool that decodes what mice see could enhance future brain-computer interfaces, according to a new study.

Named CEBRA, the system was developed by researchers at EPFL, a university in Switzerland. Their aim? To uncover hidden relationships between the brain and behaviour.

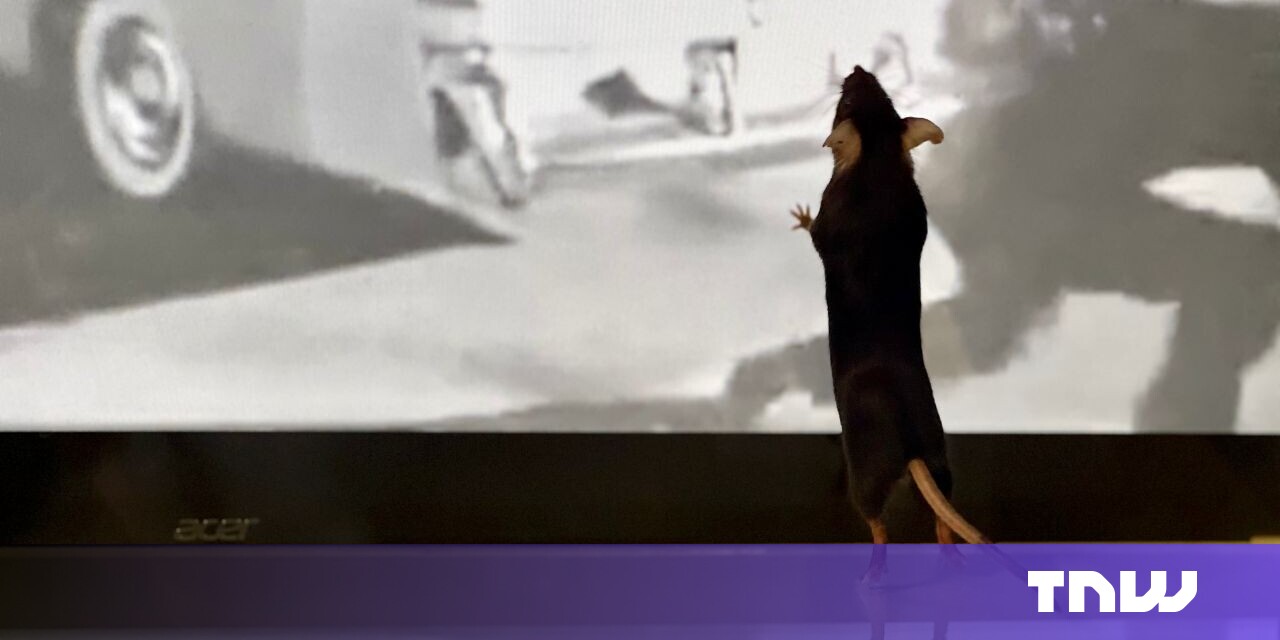

To test CEBRA (pronounced “zebra”), the team tried to decode what a mouse sees when it watches a video.

First, the researchers collected open-access neural data on rodents watching movies. Some of the brain activity had been measured with electrode probes in a mouse’s visual cortex. The remainder came via optical probes of genetically modified mice, which were engineered so their neurons glowed green when activated.

All this data was used to train the base algorithm in CEBRA. As a result, the system learned to map brain activity to specific frames in a video.

Next, the team applied the tool to another mouse that had watched the video. After analysing the data, CEBRA could accurately predict what the mouse had seen from the brain signals alone.
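For readers who want a feel for the idea, the train-then-decode loop can be sketched in a few lines of Python. This is a toy illustration only, not CEBRA or the researchers' code: the data is synthetic, the decoder is a plain nearest-neighbour lookup, and every name in it (`make_recording`, `fit_frame_decoder`, `decode_frames`) is hypothetical. It also assumes the second recording shares the same neural response patterns as the first, which sidesteps the harder problem of generalising across animals.

```python
import numpy as np


def make_recording(tuning, noise, rng):
    """Simulate one viewing: each frame evokes a fixed pattern plus noise."""
    return tuning + noise * rng.standard_normal(tuning.shape)


def fit_frame_decoder(activity, frame_ids):
    """'Training' a 1-nearest-neighbour decoder just stores labelled activity."""
    return np.asarray(activity), np.asarray(frame_ids)


def decode_frames(decoder, activity):
    """Predict, for each activity sample, the frame of its closest stored sample."""
    train_x, train_y = decoder
    # Squared Euclidean distance between every new sample and every stored one.
    dists = ((activity[:, None, :] - train_x[None, :, :]) ** 2).sum(axis=2)
    return train_y[dists.argmin(axis=1)]


rng = np.random.default_rng(0)
n_frames, n_neurons = 30, 50            # assumed toy sizes
frames = np.arange(n_frames)

# Assumption: one random response pattern per video frame.
tuning = rng.standard_normal((n_frames, n_neurons))

train_activity = make_recording(tuning, noise=0.3, rng=rng)  # recording used for training
test_activity = make_recording(tuning, noise=0.3, rng=rng)   # held-out viewing

decoder = fit_frame_decoder(train_activity, frames)
predicted = decode_frames(decoder, test_activity)
accuracy = float((predicted == frames).mean())
print(f"frame decoding accuracy: {accuracy:.2f}")
```

The accuracy here is high because the synthetic signal is clean and both recordings share one set of response patterns. Real neural data is far noisier and differs between animals, which is the gap a system like CEBRA is designed to bridge.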

The team then reconstructed the clip from the neural activity.

Unsurprisingly, the researchers aren’t solely interested in the movie-viewing habits of rodents.

“The goal of CEBRA is to uncover structure in complex systems. And, given the brain is the most complex structure in our universe, it’s the ultimate test space for CEBRA,” said EPFL’s Mackenzie Mathis, the study’s principal investigator.

“It can also give us insight into how the brain processes information and could be a platform for discovering new principles in neuroscience by combining data across animals, and even species.”

Nor is CEBRA limited to neuroscience research. According to Mathis, it can also be applied to numerous datasets involving time or joint information, including animal behaviour and gene-expression data. But perhaps the most exciting application is in brain-computer interfaces (BCIs).

As the movie-loving mice showed, even the primary visual cortex — often considered to underlie only fairly basic visual processing — can be used to decode videos in a BCI style. For the researchers, an obvious next step is using CEBRA to enhance neural decoding in BCIs.

“This work is just one step towards the theoretically-backed algorithms that are needed in neurotechnology to enable high-performance BMIs,” said Mathis.

You can read the full study in Nature.