First, let’s take a look at our camera blind test results. This is how the scoring system works: For each scene, the smartphone with the most votes gets five points, second place gets four points, and so on. Last place goes home empty-handed. We then tally all the points and voilà! We have a winner.

Since the Google Pixel 7 Pro and Honor Magic 5 Pro were tied on points, we used the total number of votes cast for each smartphone as a tiebreaker. Here, the Pixel 7 Pro led by approximately 400 votes.
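The scoring and tiebreak described above can be sketched in a few lines of Python. This is only an illustration, not the script used for the actual evaluation: the function names `score_scene` and `tally`, the placeholder phone names, and all vote counts are made up for the example. How ties within a single scene were handled is not stated, so the sketch simply keeps the input order in that case.

```python
from collections import defaultdict


def score_scene(votes):
    """Award points for one scene: most votes -> N-1 points, ..., last place -> 0.

    With six phones, that is five points for first place, as in the article.
    Assumption: ties within a scene keep their input order (sorted() is stable).
    """
    ranked = sorted(votes, key=votes.get, reverse=True)
    top = len(ranked) - 1
    return {phone: top - place for place, phone in enumerate(ranked)}


def tally(scenes):
    """Sum points over all scenes; break ties on points by total votes cast."""
    points = defaultdict(int)
    total_votes = defaultdict(int)
    for votes in scenes:
        for phone, pts in score_scene(votes).items():
            points[phone] += pts
        for phone, count in votes.items():
            total_votes[phone] += count
    ranking = sorted(
        points, key=lambda p: (points[p], total_votes[p]), reverse=True
    )
    return ranking, dict(points), dict(total_votes)


# Hypothetical vote counts for two scenes (not the real survey data).
scene_1 = {"Phone A": 400, "Phone B": 300, "Phone C": 200,
           "Phone D": 100, "Phone E": 50, "Phone F": 10}
scene_2 = {"Phone A": 290, "Phone B": 500, "Phone C": 200,
           "Phone D": 100, "Phone E": 50, "Phone F": 10}

ranking, points, total_votes = tally([scene_1, scene_2])
for phone in ranking:
    print(phone, points[phone], total_votes[phone])
```

In this made-up example, Phone A and Phone B end up with the same number of points, and Phone B is ranked first because it collected more votes overall, which mirrors how the Pixel 7 Pro and Honor Magic 5 Pro tie was resolved.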

NextPit’s camera blind test results:

Thus, the Vivo X90 Pro+ is far ahead of the competition in first place, having undoubtedly delivered excellent photos. Above all, the smartphone also showcased one thing: really eye-catching pictures.

It is not only the camera specifications that count but also photo quality: What stands out tends to get picked, and not necessarily what is better. Of course, this is not true for every NextPit reader, but unfortunately, it seems to be the case for the masses.

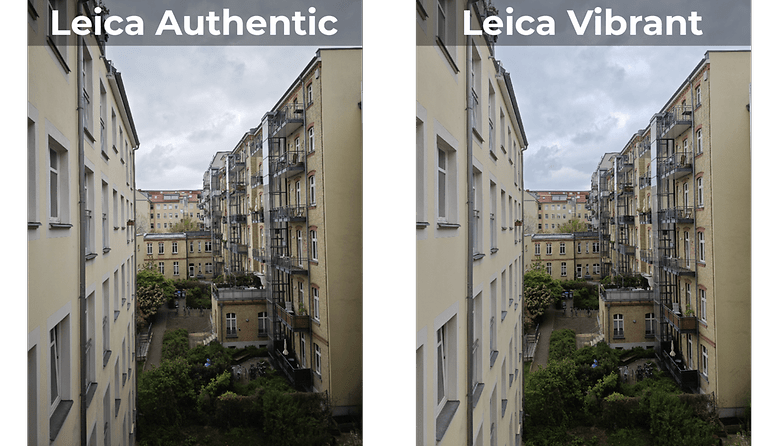

For the Xiaomi 13 Ultra, we selected the “Leica Authentic” color profile for the blind test, which strives to deliver neutral colors and natural contrasts – and is much more subdued than the other “Leica Vibrant” option. The result? The Xiaomi 13 Ultra ended up in last place. With the other color profile, the blind test might have ended differently.

The most important thing about every test, regardless of whether it is a blind test or not, is therefore: Take a look at the results yourself. Do you like the photography style of the respective smartphone or do you not like the results at all?

Scene 1: Daylight, portrait

The differences were particularly drastic for portrait photos. While Xiaomi and Vivo used a relatively strong beauty filter right out of the box, the other brands held back noticeably.

On the other hand, Honor applied a highly exaggerated HDR effect, which many voters in the blind test appreciated. The iPhone 14 Pro Max and the Google Pixel 7 Pro have the most natural results.

Scene 2: Daylight, ultra-wide angle

A clear win here: In this scene, the iPhone 14 Pro Max won outright with 40 percent of the votes cast – with a dramatic photo that matches the scene. Second place goes to the Vivo X90 Pro+, which won votes with a strong HDR effect and unnaturally saturated colors.

Third place goes to Samsung with rather peppy colors, albeit with much more natural contrasts. The other smartphones are even more neutral and have to share the remaining points.

Scene 3: Daylight, main camera

The most dramatic look translated to five points for the Vivo X90 Pro+, which also offered excellent detail reproduction in this scene. Only the Honor smartphone performed slightly better in terms of details, but its photo is less peppy.

Third place belonged to the Galaxy S23 Ultra, which somewhat surprised me in view of the unpleasantly overdrawn micro-contrasts. However, on closer inspection, they make the photo look sharper than it is.

Scene 4: Daylight, 3x zoom

The Pixel 7 Pro, the Galaxy S23 Ultra, and the iPhone 14 Pro Max occupied the first three places in this scene, in that order. The Google smartphone sitting in first place surprised me in view of its low level of detail.

However, all three smartphones deliver a balanced contrast. The Honor Magic 5 Pro, with its unnatural HDR effect, and the Xiaomi 13 Ultra, whose rendering is probably too close to reality, ended up in the last two places. After all, we shot this photograph against a backlight.

Scene 5: Daylight, 5x zoom

In our fifth scene, the Xiaomi 13 Ultra ended up in first place. Very little separated second to fourth place, where Vivo, Samsung, and Google delivered three very different interpretations of the same scene.

Vivo is colorful and rich in detail, Samsung is colorful with extreme micro-contrasts, while Google is natural and rich in detail, similar to the Xiaomi 13 Ultra.

Scene 6: Daylight, 10x zoom

In terms of 10x zoom, Samsung has the upper hand on paper with its native 10x lens, but only landed in second place here, with the Vivo X90 Pro+ holding a razor-thin lead. In a direct comparison, the Vivo photo is “cleaner” than the Samsung image, which, however, captures more details.

Third place belongs to the Honor Magic 5 Pro with a very similar look to the X90 Pro+, but significantly worse details.

Scene 7: Mixed light, portrait

In our indoor portrait session, the Galaxy S23 Ultra made it to first place with the Honor Magic 5 Pro occupying second place. Honor in particular surprised me here with the muddy details. What do you think of the photo?

The other four smartphones are practically on par: three of them picked up eleven percent of the votes each, while the Pixel 7 Pro had nine percent.

Scene 8: Mixed light, main camera

Admittedly, this scene with mixed lighting was not only a challenge for the smartphones, but also for the survey participants. The winner here was the iPhone with its high-contrast shadows on the walls.

The Galaxy S23 Ultra and the Xiaomi 13 Ultra follow in second and third place, respectively, while Honor, Vivo, and Google were practically on par in the chasing pack.

Scene 9: Mixed light, ultra-wide angle

When it comes to the ultra-wide-angle shot under mixed lighting, two smartphones are way out in front: the Honor Magic 5 Pro with 42 percent and the Galaxy S23 Ultra with 33 percent of the votes, delivering the most vivid images.

The other four devices share the remaining 25 percent of votes among themselves.

Scene 10: Mixed light, 5x zoom

Our editorial dog Luke was best captured by the Vivo X90 Pro+, which received 25 percent of the votes. The Pixel 7 Pro received 23 percent of the votes, which surprised me somewhat considering the muddy details.

The Vivo really outperformed Google by leaps and bounds in terms of fine details. Clear differences were also visible in the white balance. If it does not work properly, the cuddly pillow is tinted blue by the much colder outdoor light.

Scene 11: Night, portrait

There are two winners in the night portrait session using two completely different approaches. The Google Pixel 7 Pro delivered a reasonably realistic result, noise included.

The Vivo X90 Pro+ is quite different. It also delivered a rather bright photo, but mercilessly flattened any noise and all details in the face. The Xiaomi 13 Ultra follows in third place with a similar result to the Pixel. Samsung, Apple, and Honor are far behind.

Scene 12: Night, ultra-wide angle

The ultra-wide-angle scene at night has a clear winner: the Vivo X90 Pro+, whose photo simply “pops” here. The Galaxy S23 Ultra and the iPhone 14 Pro Max are neck-and-neck in second and third place, with the Samsung smartphone offering the best detail reproduction of the top three.

Scene 13: Night, main camera

Same subject, different lens: in your opinion, this house on the former inner-German border looked best when shot using the iPhone 14 Pro Max. The Vivo X90 Pro+ followed in second place, while third place belonged to the Galaxy S23 Ultra.

Scene 14: Night, 5x zoom

Finally, the night shot with 5x zoom was won by the Xiaomi 13 Ultra. The iPhone 14 Pro Max and the Galaxy S23 Ultra followed closely behind in second and third place, albeit with significantly weaker detail reproduction.

Scene 15: Absolute darkness

The last scene was shot in absolute darkness. It was a huge challenge here to even make out our camera test chart with the naked eye. The clear winner in this scene was the Vivo X90 Pro+.

The smartphone is the only model that actually produced a reasonably clear picture. Second place went to Xiaomi, which has the same sensor in its main camera and is still much better than the rest of the competition.

Of course, we also took the blind test as an opportunity to discuss our review procedure for smartphone cameras. In every smartphone review, we shoot countless photos and try to give you the most complete picture possible concerning the camera’s performance.

However, what does it ultimately boil down to? Comparisons with the competition or as many test photos as possible? Or should we rather measure and generate other standards? How can we convey the clearest picture possible concerning the usability of the camera, which wasn’t even a part of this blind test? We look forward to your opinion in the comments!